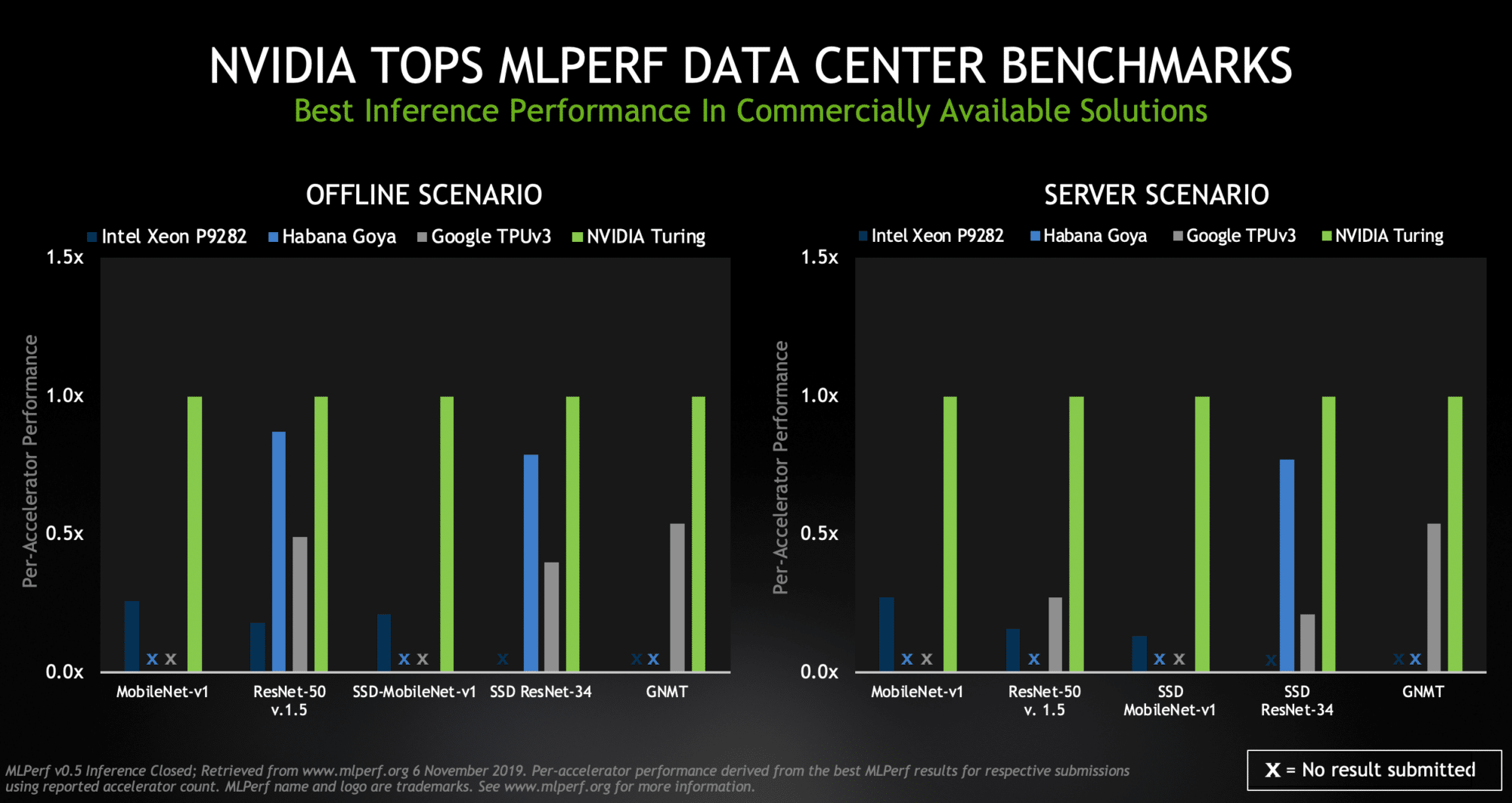

NVIDIA Wins New AI Inference Benchmarks | NVIDIA Newsroom

The results of the industry’s first independent suite of AI benchmarks for inference, called MLPerf Inference 0.5, demonstrate the performance of NVIDIA Turing™ GPUs for data centers and the NVIDIA Xavier™ system-on-a-chip for edge computing. NVIDIA posted the fastest results on the new MLPerf benchmarks measuring the performance of AI inference workloads in data centers and at the edge.

NVIDIA today posted the fastest results on new benchmarks measuring the performance of AI inference workloads in data centers and at the edge, building on the company’s equally strong position in recent benchmarks measuring AI training.

In the latest MLPerf Inference v5.0 benchmarks, which reflect some of the most challenging inference scenarios, the NVIDIA Blackwell platform set records and marked NVIDIA’s first MLPerf submission using the NVIDIA GB200 NVL72 system, a rack-scale solution designed for AI reasoning.

NVIDIA Turing, Xavier Lead in MLPerf AI Inference Benchmarks | NVIDIA Blog

The brain of the DRIVE AGX platform leads MLPerf inference tests. It runs a wide range of diverse deep neural networks. This is a massive AI workload, and the new MLPerf Inference 0.5 benchmark suite gives great insight into the true performance of solutions being…

The A100 retains leadership in many benchmarks versus other available products, while NVIDIA Jetson AGX Orin leads in edge computing. Every three months, as the MLCommons consortium coordinates the publication of new AI benchmarks for inference and training, we share our perspectives with you.

NVIDIA unveils world’s first long-context AI that serves 32x more users live. Designed for Blackwell, Helix reshapes long-context decoding and sets a new benchmark for fast, multi-user AI.

NVIDIA today announced its AI computing platform has again smashed performance records in the latest round of MLPerf, extending its lead on the industry’s only independent benchmark measuring AI performance of hardware, software and services.