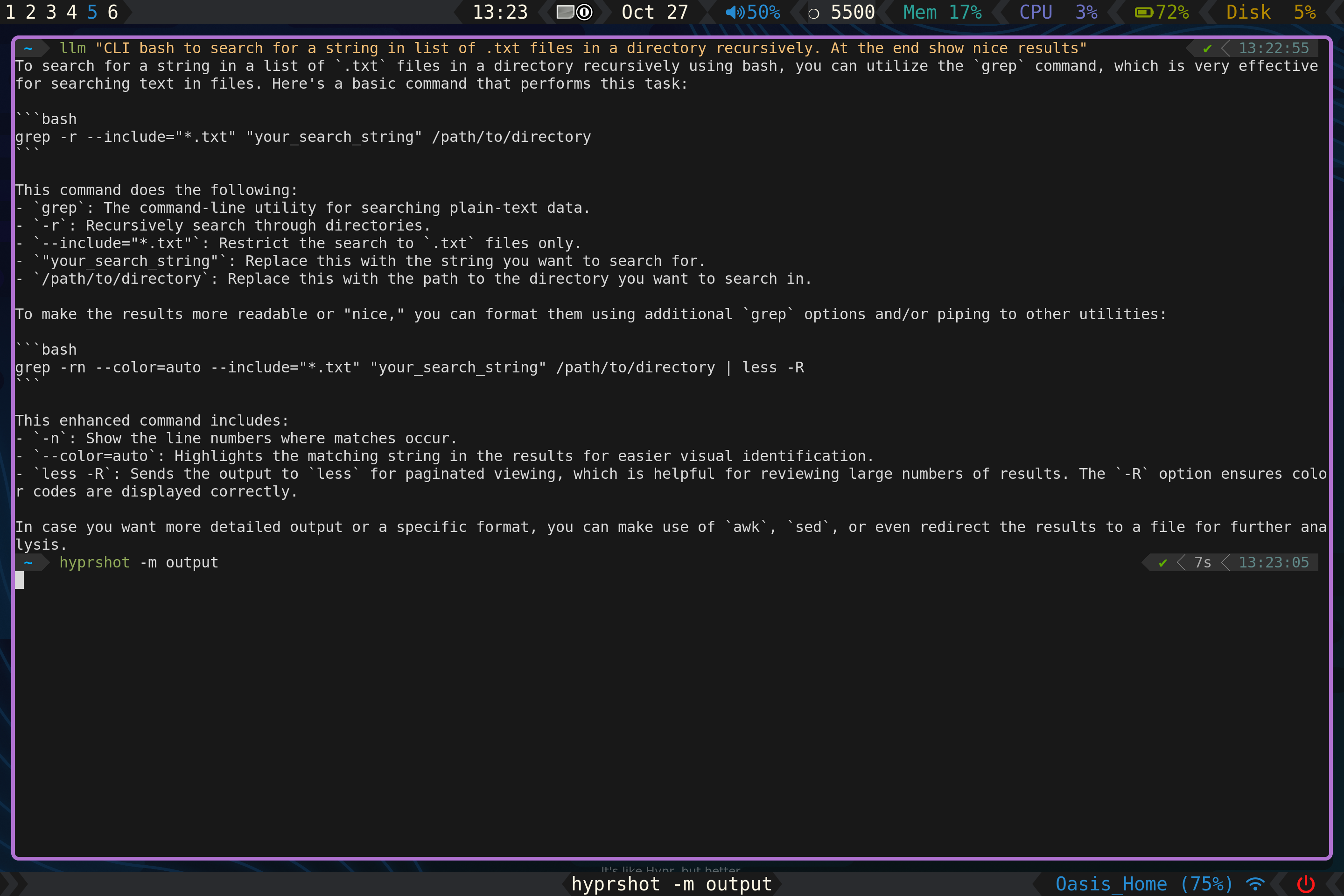

Running Custom GGUF Models With Ollama (Learning Deep Learning)

Learn how to install a custom Hugging Face GGUF model using Ollama, so you can try out the latest LLMs locally. This guide covers downloading the model, creating a Modelfile, and setting up the model in Ollama and Open WebUI.
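To make the Modelfile step a little more concrete, here is a minimal sketch in Python that only assembles the text of a Modelfile. `FROM`, `PARAMETER`, and `SYSTEM` are Modelfile instructions; everything else is an assumption for illustration: the helper name `build_modelfile`, the path `./model.gguf`, and the example system prompt and temperature are placeholders, not values taken from the guide.

```python
from typing import Optional


def build_modelfile(
    gguf_path: str,
    system_prompt: str = "",
    temperature: Optional[float] = None,
) -> str:
    """Return Modelfile text that points Ollama at a local GGUF file."""
    # FROM is the only required instruction: it names the weights to load.
    lines = [f"FROM {gguf_path}"]
    # Optional sampling parameter (the value is illustrative only).
    if temperature is not None:
        lines.append(f"PARAMETER temperature {temperature}")
    # Optional system prompt, wrapped in triple quotes.
    if system_prompt:
        lines.append(f'SYSTEM """{system_prompt}"""')
    return "\n".join(lines) + "\n"


# "./model.gguf" is a hypothetical path; use the file you actually downloaded.
modelfile_text = build_modelfile(
    "./model.gguf",
    system_prompt="You are a concise assistant.",
    temperature=0.7,
)
print(modelfile_text)
```

Saving `modelfile_text` to a file named `Modelfile` next to the GGUF file would give Ollama something to build a model from.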

Here are the steps to create custom models: create a Modelfile and fill in the required fields, then create a model from this Modelfile and run it locally in the terminal.

In this video, I demonstrate how to create custom models locally with Ollama, using a model from Hugging Face. By the end of the video, you will be able to create a simple…

In this blog post, we're going to look at how to download a GGUF model from Hugging Face and run it locally. There are over 1,000 models on Hugging Face that match the search term GGUF, but we're going to download the TheBloke/MistralLite-7B-GGUF model. We'll do this using the Hugging Face Hub CLI, which has to be installed first.

In this article, we'll go through the steps to set up and run LLMs from Hugging Face locally using Ollama. For this tutorial, we'll work with the model zephyr-7b-beta, and more specifically the file zephyr-7b-beta.Q5_K_M.gguf.

1. Downloading the model. To download the model from Hugging Face, we can either use the web interface (GUI) or the Hugging Face CLI.
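The download step described above could be sketched in Python roughly as follows. This is an illustration under assumptions, not the exact commands from any of the quoted posts: the repository id `TheBloke/zephyr-7B-beta-GGUF` is assumed from the file name mentioned above, and the helper names are made up for this example. `hf_hub_download` comes from the `huggingface_hub` package (installed with `pip install huggingface_hub`); it is imported lazily so that the sketch runs, and prints the equivalent CLI command, even when the package is not installed.

```python
from typing import List

# Assumed repository id and file name; check the model page before relying on them.
REPO_ID = "TheBloke/zephyr-7B-beta-GGUF"
FILENAME = "zephyr-7b-beta.Q5_K_M.gguf"


def cli_download_command(repo_id: str, filename: str, local_dir: str = ".") -> List[str]:
    """Build the Hugging Face CLI invocation that fetches one file from a repo."""
    return ["huggingface-cli", "download", repo_id, filename, "--local-dir", local_dir]


def download_gguf(repo_id: str, filename: str, local_dir: str = ".") -> str:
    """Download one GGUF file with the Python API and return its local path."""
    # Imported here so the rest of the sketch works without the package.
    from huggingface_hub import hf_hub_download

    return hf_hub_download(repo_id=repo_id, filename=filename, local_dir=local_dir)


# Only print the command; calling download_gguf(REPO_ID, FILENAME) would
# actually transfer several gigabytes.
print(" ".join(cli_download_command(REPO_ID, FILENAME)))
```

Depending on the installed `huggingface_hub` version, the CLI entry point may be exposed as `hf` rather than `huggingface-cli`, so it is worth checking which one your environment provides.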

Run Custom GGUF Model on Ollama

In this article, we'll show you how to download open source models from Hugging Face, transform them, and use them in your local Ollama setup. Ollama is a tool that simplifies creating, running, and managing large language models (LLMs). Hugging Face is a machine learning platform that's home to nearly 500,000 open source models.

In this tutorial I'll demonstrate how to import any large language model from Hugging Face and run it locally on your machine using Ollama, focusing specifically on GGUF files. As an example, I'll use the CapybaraHermes model from TheBloke.

Bring any Hugging Face model to Ollama! As of February 2024, there are only about 100 models in the Ollama library, whereas Hugging Face has more than 500k models.

With these simple steps, you can easily download, install, and run your own models using Ollama. Whether you're exploring the capabilities of Llama or building your own custom models, Ollama makes it accessible and efficient.
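For readers who want to script the last step, registering a downloaded GGUF file with Ollama and running it, the following Python sketch shows one possible shape. It relies on the `ollama create <name> -f <Modelfile>` and `ollama run <name> <prompt>` commands; the model name `my-gguf-model`, the prompt, and the helper functions are hypothetical and only serve the example. The sketch prints the commands rather than executing them, since running them needs a local Ollama install and an existing Modelfile.

```python
import shlex
import shutil
import subprocess
from typing import List


def ollama_create_command(model_name: str, modelfile: str = "Modelfile") -> List[str]:
    """Command that registers a model in Ollama from a Modelfile."""
    return ["ollama", "create", model_name, "-f", modelfile]


def ollama_run_command(model_name: str, prompt: str) -> List[str]:
    """Command that sends a single prompt to a registered model."""
    return ["ollama", "run", model_name, prompt]


def register_and_run(model_name: str, prompt: str, modelfile: str = "Modelfile") -> str:
    """Create the model, run one prompt, and return the model's reply."""
    if shutil.which("ollama") is None:
        raise RuntimeError("ollama executable not found on PATH")
    subprocess.run(ollama_create_command(model_name, modelfile), check=True)
    result = subprocess.run(
        ollama_run_command(model_name, prompt),
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout


MODEL_NAME = "my-gguf-model"  # hypothetical name, pick your own

# Show the commands without executing them.
print(shlex.join(ollama_create_command(MODEL_NAME)))
print(shlex.join(ollama_run_command(MODEL_NAME, "Why is the sky blue?")))
```

Calling `register_and_run(MODEL_NAME, "Why is the sky blue?")` would perform both steps, assuming a `Modelfile` whose `FROM` line points at the downloaded GGUF file is present in the working directory.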

Ollama: Running GGUF Models From Hugging Face (Mark Needham)