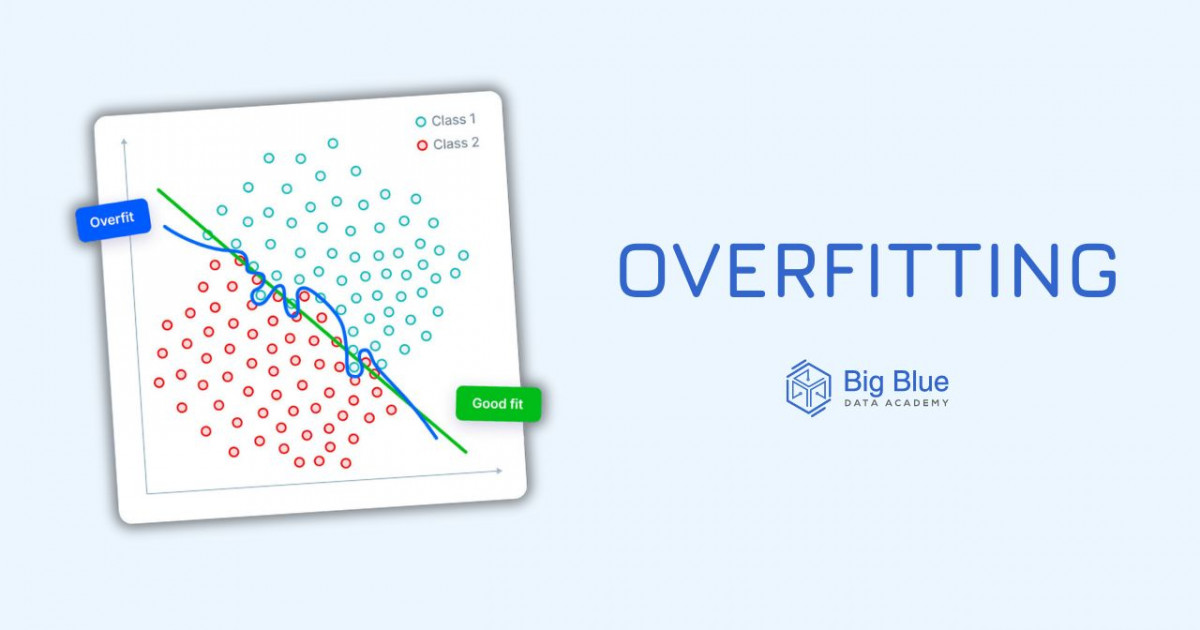

Mastering Overfitting And Underfitting In Machine Learning

Overfitting in neural networks isn't just about the model memorizing its training data; it's also about the model's inability to learn new things or deal with anomalies. Detecting overfitting in a black-box model: the interpretability of a model is directly tied to how well you can judge its ability to generalize.

So, overfitting in my world is treating random deviations as systematic. An overfitting model is worse than a non-overfitting model, ceteris paribus. However, you can certainly construct an example in which the overfitting model has some other features that the non-overfitting model doesn't have, and argue that this makes the former better than the latter.
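To make "treating random deviations as systematic" concrete, here is a minimal sketch using NumPy only. The data-generating process (a straight line plus Gaussian noise), the sample sizes, and the polynomial degrees are all assumptions chosen for illustration, not something taken from the discussion above.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_data(n):
    # Assumed ground truth: y = 2x plus random noise.
    x = rng.uniform(-1.0, 1.0, size=n)
    y = 2.0 * x + rng.normal(scale=0.3, size=n)
    return x, y

x_train, y_train = make_data(15)
x_test, y_test = make_data(200)

def mse(coeffs, x, y):
    # Mean squared error of a fitted polynomial on (x, y).
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))

results = {}
for degree in (1, 9):
    coeffs = np.polyfit(x_train, y_train, deg=degree)
    results[degree] = (mse(coeffs, x_train, y_train), mse(coeffs, x_test, y_test))
    print(f"degree={degree}: train MSE={results[degree][0]:.4f}, "
          f"test MSE={results[degree][1]:.4f}")
```

The degree-9 fit can never have a higher training error than the degree-1 fit, because it has strictly more freedom to chase the noise. Whether that extra freedom hurts on the held-out points is what the test MSE column is meant to reveal.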

Overfitting and underfitting are basically inadequate explanations of the data by a hypothesized model, and can be seen as the model overexplaining or underexplaining the data. They arise from the relationship between the model used to explain the data and the model generating the data.

Firstly, I have divided the data into train and test sets for cross-validation. After cross-validation I built an XGBoost model using the parameters below: `n_estimators = 100`, `max_depth = 4`, `scale_pos_weight = 0.2`, as the data is imbalanced (85% positive class). The model is overfitting the training data. What can be done to avoid overfitting?

Empirically, I have not found it difficult at all to overfit random forest, guided random forest, regularized random forest, or guided regularized random forest. They regularly perform very well in cross-validation, but poorly when used with new data due to overfitting. I believe it has to do with the type of phenomena being modeled. It's not much of a problem when modeling a mechanical ...
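For the XGBoost question above, one quick sanity check is whether the class weight is in a sensible range. The XGBoost documentation suggests the ratio of negative to positive instances as a typical starting value for `scale_pos_weight`. The sketch below only does that arithmetic; the helper name and the counts (850 positives, 150 negatives, standing in for the quoted 85% positive class) are hypothetical.

```python
def suggested_scale_pos_weight(n_positive: int, n_negative: int) -> float:
    """Common heuristic: number of negatives divided by number of positives."""
    if n_positive <= 0:
        raise ValueError("n_positive must be greater than zero")
    return n_negative / n_positive

# Hypothetical counts matching an 85% positive class.
ratio = suggested_scale_pos_weight(n_positive=850, n_negative=150)
print(round(ratio, 3))  # 0.176
```

By this heuristic the quoted value of 0.2 is in a reasonable range, so the class weight alone is unlikely to explain the overfitting. Tree depth, the number of trees, and the other regularization settings are the more likely places to look.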

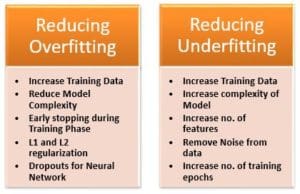

How much is overfitting? For example, results on seen data are between 1% and 15% better than on unseen data. Is there a range of values, for example 2%, that is considered normal and not overfitting?

Why does a cross-validation procedure overcome the problem of overfitting a model?

A common way to reduce overfitting in a machine learning algorithm is to use a regularization term that penalizes large weights (L2) or non-sparse weights (L1), etc. How can such regularization reduce ...

How can I tell whether a model is overfitting, underfitting, or a good fit? I thought that if I split the values like this: 157000 120000 and 157000 265000, I could draw some inferences from them.
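On the regularization question above, the effect of an L2 penalty is easiest to see in linear regression, where the penalized solution has a closed form. The following is a rough sketch under assumed synthetic data (30 samples, 10 features, only two of which matter); `ridge_weights` is an illustrative helper, not a library function.

```python
import numpy as np

def ridge_weights(X, y, lam):
    # Closed-form ridge solution: w = (X^T X + lam * I)^(-1) X^T y
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)

rng = np.random.default_rng(0)
X = rng.normal(size=(30, 10))
true_w = np.zeros(10)
true_w[:2] = [3.0, -2.0]          # assumed: only two features carry signal
y = X @ true_w + rng.normal(scale=0.5, size=30)

# Norm of the fitted weight vector for increasing penalty strength.
norms = {lam: float(np.linalg.norm(ridge_weights(X, y, lam)))
         for lam in (0.0, 1.0, 10.0, 100.0)}
for lam, norm in norms.items():
    print(f"lambda={lam:>5}: ||w|| = {norm:.3f}")
```

As the penalty grows, the weight vector shrinks toward zero. That limits how strongly the model can react to any single feature, including features that only appear predictive because of noise in the training sample. The price is some bias, so the penalty strength is usually chosen on held-out data.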
