Performance Metrics Evaluation for Machine Learning Models

Here, we introduce the most common evaluation metrics used for typical supervised ML tasks, including binary, multi-class, and multi-label classification, regression, and image segmentation. To evaluate the performance of classification models, we use the following metrics:

1. Accuracy is a fundamental metric for evaluating the performance of a classification model. It is the proportion of correct predictions the model makes out of all its predictions.
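A minimal Python sketch of this definition, accuracy = (number of correct predictions) / (total number of predictions). The function name `accuracy` is a hypothetical helper for illustration, not a library API; it works the same way for binary and multi-class labels. For single-label tasks, scikit-learn's `sklearn.metrics.accuracy_score` computes the same quantity.

```python
from typing import Sequence


def accuracy(y_true: Sequence, y_pred: Sequence) -> float:
    """Fraction of predictions that exactly match the true labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if len(y_true) == 0:
        raise ValueError("cannot compute accuracy on an empty set")
    # Count positions where the predicted label equals the true label.
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


# Illustrative labels: 4 of the 5 predictions match.
y_true = [1, 0, 1, 1, 0]
y_pred = [1, 0, 0, 1, 0]
print(accuracy(y_true, y_pred))  # 0.8
```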

Machine Learning Models Performance Metrics

Evaluation metrics are quantitative measures used to assess the performance and effectiveness of a statistical or machine learning model. These metrics show how well the model is performing and help in comparing different models or algorithms.

Performance metrics are a part of every machine learning pipeline. They tell you whether you're making progress and put a number on it. All machine learning models, whether linear regression or a state-of-the-art (SOTA) technique like BERT, need a metric to judge performance.

Model evaluation metrics are used to explain the performance of models, and they aim to discriminate among the results of different models when building a machine learning model. Machine learning models are the modern data-driven solution engines, but how can one tell whether they are doing their job well? This is where model evaluation metrics come in: they help one understand the strengths and weaknesses of a model with a view to optimization and real-world application.
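As a sketch of how a metric puts a number on progress and lets you compare models, the following computes mean absolute error (MAE) and root mean squared error (RMSE), two common regression metrics, for two hypothetical sets of predictions on the same made-up targets: a constant baseline that always predicts the target mean, and a candidate standing in for something like a fitted linear regression. The helper names `mae`, `rmse`, and `_residuals` are illustrative assumptions, not a library API, and the numbers are invented for the example; lower is better for both metrics.

```python
import math
from typing import List, Sequence


def _residuals(y_true: Sequence[float], y_pred: Sequence[float]) -> List[float]:
    """Return y_true - y_pred elementwise after checking the inputs line up."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return [t - p for t, p in zip(y_true, y_pred)]


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error: the average magnitude of the residuals."""
    res = _residuals(y_true, y_pred)
    return sum(abs(r) for r in res) / len(res)


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean squared error: square root of the mean squared residual."""
    res = _residuals(y_true, y_pred)
    return math.sqrt(sum(r * r for r in res) / len(res))


# Hypothetical held-out targets and predictions from two candidate models.
y_true = [3.0, 5.0, 7.0, 9.0]
baseline = [6.0, 6.0, 6.0, 6.0]   # always predicts the mean of y_true
candidate = [3.5, 4.5, 7.5, 8.5]  # stand-in for, e.g., a fitted linear regression

for name, preds in [("baseline", baseline), ("candidate", candidate)]:
    print(f"{name}: MAE={mae(y_true, preds):.2f}, RMSE={rmse(y_true, preds):.2f}")
# baseline: MAE=2.00, RMSE=2.24
# candidate: MAE=0.50, RMSE=0.50
```

Because RMSE squares residuals before averaging, it penalizes a few large errors more heavily than MAE does, which is why the two metrics can rank models differently on other data.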

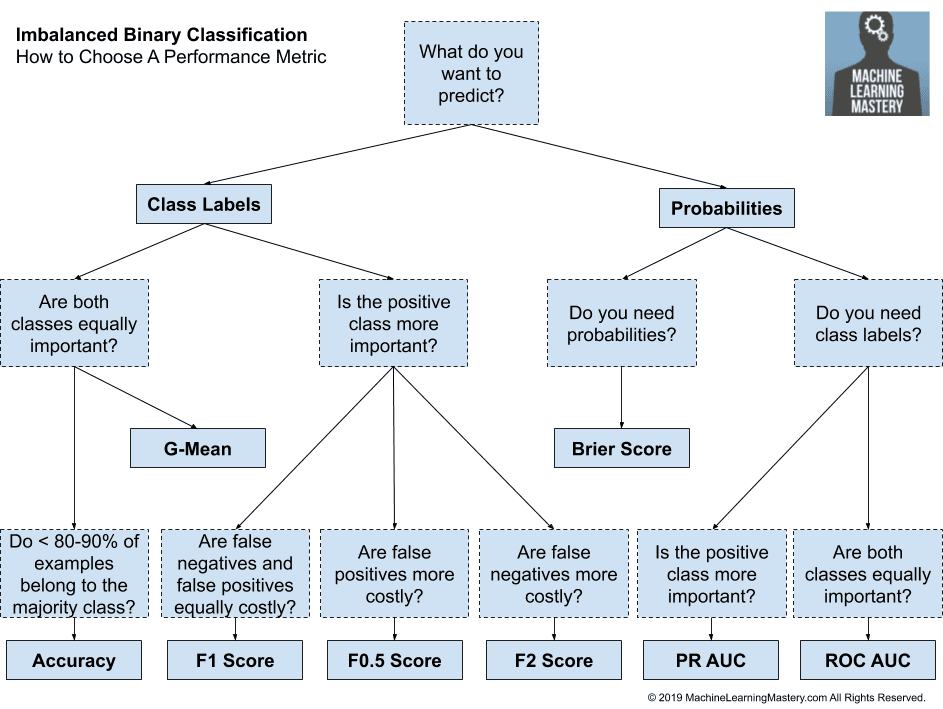

Machine Learning Evaluation Metrics In R

Performance metrics serve as the cornerstone of machine learning model evaluation and improvement. They provide the quantitative means to assess model effectiveness, compare different approaches, inform decision-making, drive iterative improvement, and adapt to changing conditions.

When selecting machine learning models, it's critical to have evaluation metrics that quantify model performance. In this post, we'll focus on the more common supervised learning problems, for which there are multiple commonly used metrics for both classification and regression tasks.

Evaluating machine learning models isn't just about high accuracy; it's about using the right metrics for the right context. In this guide, we take a deep dive into various evaluation metrics. In simple terms, performance metrics in machine learning measure the accuracy, efficiency, and effectiveness of a model. These metrics help data scientists and engineers understand whether a model is making correct predictions or needs improvement.
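To illustrate why high accuracy alone can mislead, here is a small sketch on a hypothetical, heavily imbalanced binary dataset (95 negatives and 5 positives, made up for the example). It uses precision, TP / (TP + FP), and recall, TP / (TP + FN), two standard classification metrics; the helper names `confusion_counts` and `precision_recall` are our own illustrative assumptions, not a library API. A degenerate model that always predicts the majority class reaches high accuracy while never finding a single positive.

```python
from typing import Sequence, Tuple


def confusion_counts(
    y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1
) -> Tuple[int, int, int, int]:
    """Return (tp, fp, tn, fn) for a binary task with the given positive label."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive and t == positive:
            tp += 1
        elif p == positive:
            fp += 1  # predicted positive, actually negative
        elif t == positive:
            fn += 1  # predicted negative, actually positive
        else:
            tn += 1
    return tp, fp, tn, fn


def precision_recall(
    y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1
) -> Tuple[float, float]:
    """Precision = TP / (TP + FP); recall = TP / (TP + FN). 0/0 is reported as 0.0."""
    tp, fp, _, fn = confusion_counts(y_true, y_pred, positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical imbalanced data: 95 negatives and 5 positives.
y_true = [0] * 95 + [1] * 5
# A degenerate "model" that always predicts the majority class (0).
y_pred = [0] * 100

tp, fp, tn, fn = confusion_counts(y_true, y_pred)
acc = (tp + tn) / len(y_true)
precision, recall = precision_recall(y_true, y_pred)
print(f"accuracy={acc:.2f} precision={precision:.2f} recall={recall:.2f}")
# accuracy=0.95 precision=0.00 recall=0.00
```

Reporting 0/0 as 0.0 is one convention among several; scikit-learn's `precision_score` and `recall_score` expose this choice through their `zero_division` parameter.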