Run DeepSeek R1 on Your Local Machine Using Ollama (Upstash Blog)

In this mini tutorial, we will show you how to run DeepSeek R1 on your local machine using Ollama. We will run DeepSeek R1 on the command line and also call it from a JavaScript (Node.js) application.

In this guide, we'll walk you through how to deploy DeepSeek R1 locally on your machine using Ollama and interact with it through Open WebUI. Whether you're a developer or an AI enthusiast, you'll learn how to set up and use this cutting-edge AI model step by step.
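The source runs R1 from a JavaScript Node application. The same idea can be sketched in Python using only the standard library. This minimal sketch makes three assumptions:

- Ollama is serving its REST API at the default address, `http://localhost:11434`.
- A model tagged `deepseek-r1` has already been pulled. Substitute whatever tag you actually use.
- The reply may contain R1's `<think>…</think>` reasoning inline.

The sketch posts to the `/api/generate` endpoint with streaming disabled and separates the reasoning from the final answer. `build_generate_request`, `split_reasoning`, and `ask` are hypothetical helper names, not part of any library.

```python
import json
import re
import urllib.request

# Assumption: Ollama's default local address.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str, base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def split_reasoning(text: str) -> tuple[str, str]:
    """Split an R1 reply into (reasoning inside <think> tags, final answer)."""
    match = re.search(r"<think>(.*?)</think>", text, flags=re.DOTALL)
    if match is None:
        # No inline reasoning block: the whole text is the answer.
        return "", text.strip()
    reasoning = match.group(1).strip()
    answer = (text[: match.start()] + text[match.end():]).strip()
    return reasoning, answer


def ask(model: str, prompt: str) -> str:
    """Send a prompt to a running Ollama server and return only the final answer."""
    with urllib.request.urlopen(build_generate_request(model, prompt)) as resp:
        body = json.load(resp)
    _, answer = split_reasoning(body["response"])
    return answer


# Example (needs a running Ollama server and a pulled model):
# print(ask("deepseek-r1", "Why is the sky blue? Answer in one sentence."))
```

Depending on your Ollama version and settings, the reasoning may not appear inline in `response`. In that case `split_reasoning` returns the text unchanged as the answer.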

100% Private AI: How to Run DeepSeek R1 on Your Local Machine (Seeb4coding)

Discover how to run the powerful DeepSeek R1 AI model locally on your machine using Ollama. This guide provides step-by-step instructions for macOS, Windows, and Ubuntu, so you can use a state-of-the-art language model without relying on the cloud.

Learn how to set up and run the DeepSeek R1 model on your local machine using Ollama, covering hardware requirements, installation steps, and client usage.

Set up Ollama and Open WebUI to run the DeepSeek-R1-0528 model locally, download and configure the 1.78-bit quantized version (IQ1_S) of the model, and run it with both GPU + CPU and CPU-only setups. Step 0: Prerequisites. To run the IQ1_S quantized version, your system must meet the following requirements:
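The 1.78-bit IQ1_S build matters for the hardware requirements because it shrinks the weights. A rough lower bound on their size is parameters × bits per weight ÷ 8 bytes.

This sketch makes two assumptions:

- DeepSeek R1 has roughly 671 billion total parameters.
- 1.78 is an average bits-per-weight figure.

It ignores the KV cache, runtime overhead, and the higher-precision layers that dynamic quantizations usually keep. Real downloads and memory needs will therefore be larger than these numbers.

```python
def naive_weight_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Lower-bound size of the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    total_bits = num_params * bits_per_weight
    return total_bits / 8 / 1e9


# Assumption: DeepSeek R1 has about 671 billion total parameters.
R1_PARAMS = 671e9

for label, bits in [("IQ1_S (~1.78-bit)", 1.78), ("4-bit", 4.0), ("8-bit", 8.0)]:
    print(f"{label}: at least ~{naive_weight_size_gb(R1_PARAMS, bits):.0f} GB of weights")
```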

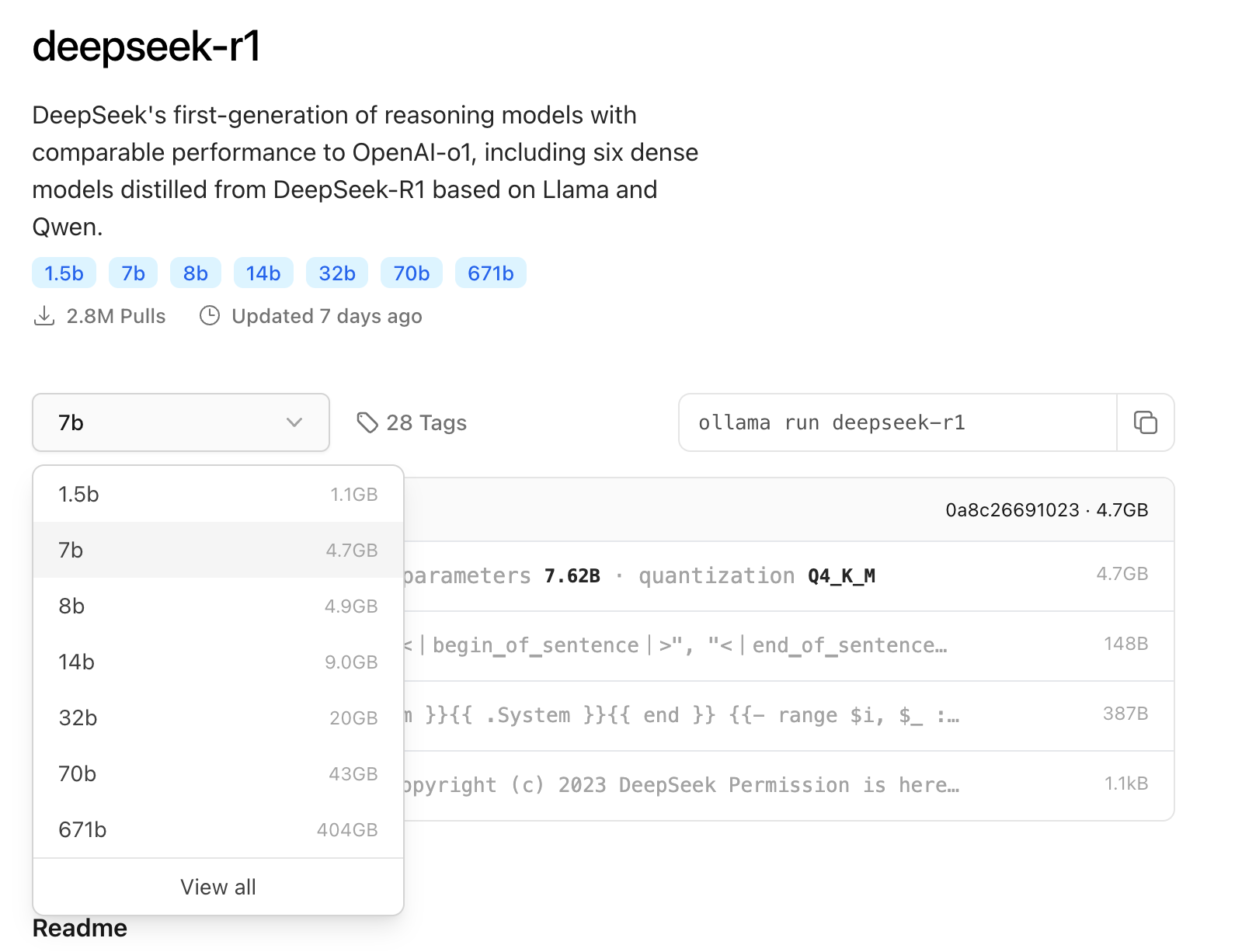

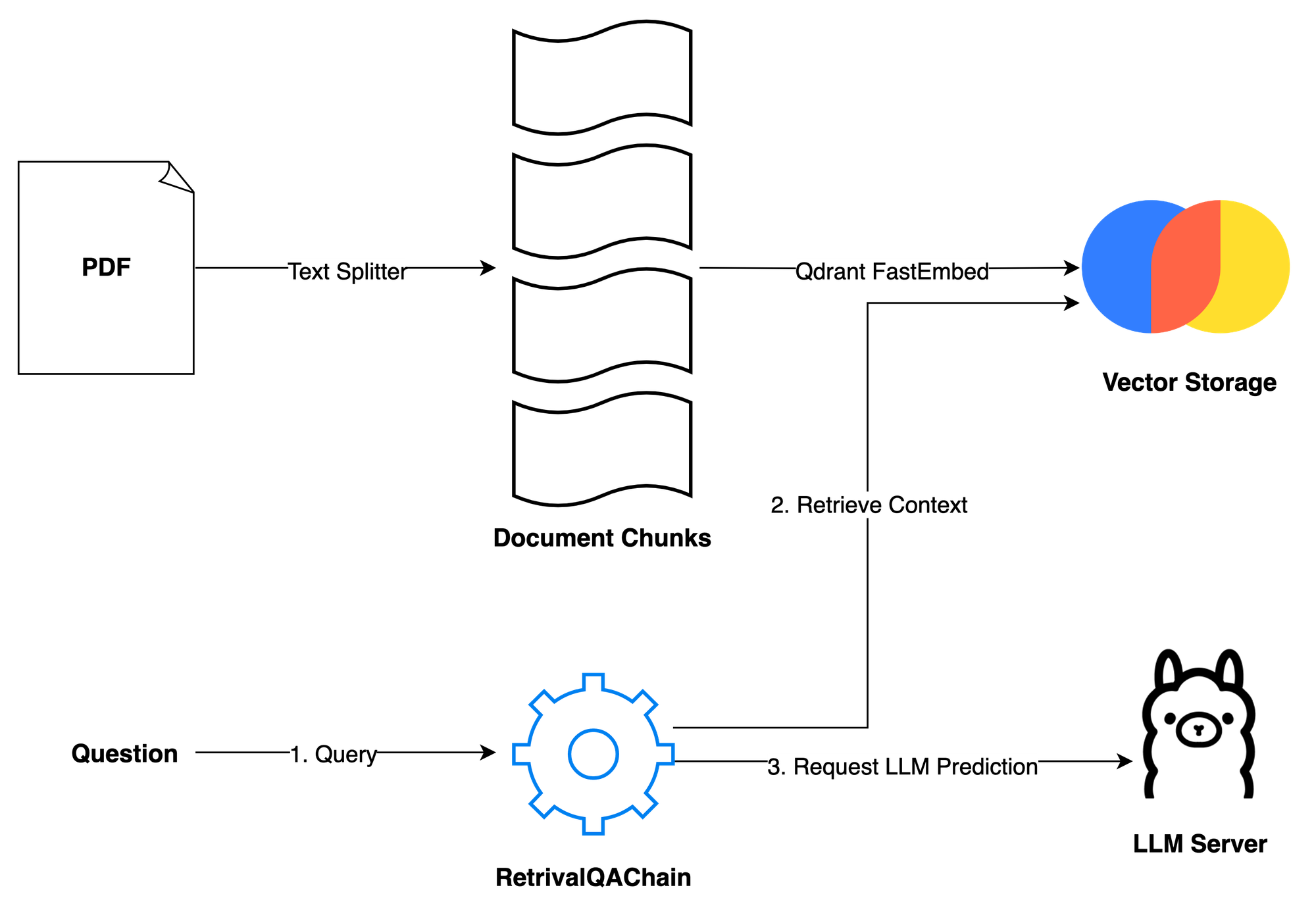
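The GPU + CPU and CPU-only setups mentioned above differ in where the model's layers run. One way to steer this is the `num_gpu` request option, which Ollama's documentation has described as the number of layers to send to the GPU. Treat the exact behavior as something to verify against your Ollama version.

This sketch uses a hypothetical helper, `build_offload_payload`, and a placeholder tag, `deepseek-r1`. It works as follows:

- Leaving `num_gpu` unset lets Ollama choose the GPU/CPU split.
- `0` asks for CPU-only execution.
- A positive number requests partial offload.

```python
import json
from typing import Optional


def build_offload_payload(model: str, prompt: str, gpu_layers: Optional[int] = None) -> dict:
    """Build an /api/generate payload that optionally controls GPU offloading.

    gpu_layers=None -> no num_gpu option; Ollama picks the GPU/CPU split itself.
    gpu_layers=0    -> ask Ollama to keep all layers on the CPU.
    gpu_layers=N>0  -> ask Ollama to offload N layers to the GPU.
    """
    payload = {"model": model, "prompt": prompt, "stream": False}
    if gpu_layers is not None:
        if gpu_layers < 0:
            raise ValueError("gpu_layers must be >= 0")
        payload["options"] = {"num_gpu": gpu_layers}
    return payload


# Placeholder tag: substitute the tag of the quantized model you pulled.
cpu_only = build_offload_payload("deepseek-r1", "Hello", gpu_layers=0)
gpu_and_cpu = build_offload_payload("deepseek-r1", "Hello")
print(json.dumps(cpu_only))
print(json.dumps(gpu_and_cpu))
```

The payload would be POSTed to `/api/generate` in the same way as any other request.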

Using Ollama: Run the DeepSeek R1 LLM on Your Local Machine Without Internet

Setting up DeepSeek R1 locally with Ollama: download Ollama from the official website and install it on your machine like any other application. Check Ollama's documentation for platform-specific support; it is available for macOS, Linux, and Windows. Ollama makes model retrieval simple:

In this guide, we'll walk through the entire process of deploying DeepSeek R1, a powerful open-source LLM, on your local machine using Ollama as the backend and Open WebUI for a …

Running DeepSeek R1 Locally: A Comprehensive Guide Using Ollama

In this tutorial, I'll explain step by step how to run DeepSeek R1 locally and how to set it up using Ollama. We'll also explore building a simple RAG (retrieval-augmented generation) application that runs on your laptop using the R1 model, LangChain, and Gradio.

How to Install DeepSeek R1 Locally Using Ollama

Step 1: Install Ollama. Ollama simplifies running LLMs locally. Follow these steps to install it. For macOS: visit ollama.ai and download the macOS app, drag the Ollama icon to your Applications folder, and open the app to start the …
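The installation and model-retrieval steps can also be scripted. This sketch makes the following assumptions:

- After installation, the `ollama` CLI is on your `PATH`.
- Models are downloaded with the `ollama pull <tag>` command.
- The server listens on the default `http://localhost:11434`.

The tag `deepseek-r1` is a placeholder for whichever variant you want, and all helper names are hypothetical.

```python
import shutil
import subprocess
import urllib.request


def ollama_installed() -> bool:
    """True if the `ollama` CLI can be found on PATH."""
    return shutil.which("ollama") is not None


def pull_command(tag: str = "deepseek-r1") -> list[str]:
    """Argument list equivalent to running `ollama pull <tag>` in a shell."""
    return ["ollama", "pull", tag]


def pull_model(tag: str = "deepseek-r1") -> None:
    """Download a model with the Ollama CLI (large tags can take a long time)."""
    if not ollama_installed():
        raise RuntimeError("The `ollama` CLI was not found; install Ollama first.")
    subprocess.run(pull_command(tag), check=True)


def server_is_running(base_url: str = "http://localhost:11434", timeout: float = 2.0) -> bool:
    """True if something answers with HTTP 200 at the (assumed default) Ollama address."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # Connection refused, timeout, or HTTP error status: treat as "not running".
        return False
```

A typical flow is to call `server_is_running()` after opening the app, then call `pull_model()` once before the first chat.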
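A RAG application like the one mentioned above retrieves relevant text and places it in the prompt before the model answers. The source builds it with LangChain and Gradio. The sketch below is not their API. It is a dependency-free illustration of the same retrieve-then-prompt flow, using simple word overlap in place of embeddings (an assumption made to keep the example self-contained). The resulting prompt could then be sent to the local R1 model through Ollama.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercased set of alphanumeric words; a crude stand-in for embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks sharing the most words with the question (ties keep input order)."""
    q = tokenize(question)
    ranked = sorted(chunks, key=lambda c: len(q & tokenize(c)), reverse=True)
    return ranked[:k]


def build_rag_prompt(question: str, chunks: list[str], k: int = 2) -> str:
    """Put the retrieved chunks into the prompt as grounding context."""
    context = "\n\n".join(retrieve(question, chunks, k))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


docs = [
    "Ollama serves models over a local HTTP API on port 11434.",
    "Gradio builds simple web interfaces for Python functions.",
    "DeepSeek R1 is a reasoning model that thinks before answering.",
]
prompt = build_rag_prompt("Which port does Ollama listen on?", docs, k=1)
print(prompt)
```

In a real pipeline, `retrieve` would typically be replaced by an embedding-based vector search.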