LangChain Run Language Models Locally Hugging Face Models Artofit

Whether you're a developer, researcher, or AI enthusiast, this guide will help you load LLMs like the Llama 3.1 8B Instruct model using Hugging Face APIs. With slight tweaks, you can apply these steps to load any other model available on Hugging Face.

Welcome to Meet AI! 🌟 In this video, we're diving into how you can download and run Hugging Face language models locally on your PC! Imagine having a state-of-the-art AI like.
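As a rough illustration of loading an instruct model through Hugging Face APIs, here is a minimal sketch using the Transformers `pipeline` helper. The repository ID, the default system prompt, and the helper names `build_messages` and `generate` are assumptions made for this example, not something the guide above specifies. It also assumes `transformers`, `torch`, and `accelerate` are installed and that the machine has enough memory for the chosen model.

```python
# Assumed repository ID for the model named in the text; swap in any other
# chat-tuned model ID from Hugging Face.
MODEL_ID = "meta-llama/Llama-3.1-8B-Instruct"


def build_messages(user_prompt, system_prompt="You are a helpful assistant."):
    """Build a chat-style message list (hypothetical helper for this sketch)."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def generate(user_prompt, model_id=MODEL_ID, max_new_tokens=128):
    """Load the model with a text-generation pipeline and return the reply text."""
    # Imported lazily so the helper above works without the heavy dependencies.
    from transformers import pipeline

    pipe = pipeline(
        "text-generation",
        model=model_id,
        torch_dtype="auto",   # use the dtype stored in the checkpoint
        device_map="auto",    # place weights on GPU if available (needs accelerate)
    )
    result = pipe(build_messages(user_prompt), max_new_tokens=max_new_tokens)
    # With chat-style input, generated_text holds the whole conversation;
    # the last entry is the assistant's new message.
    return result[0]["generated_text"][-1]["content"]
```

Calling `generate("Explain what a tokenizer does.")` downloads the weights on first use and caches them locally. Llama models on Hugging Face are typically gated, so you would likely need to accept the license on the model page and authenticate before the download succeeds.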

Run Any Hugging Face Spaces Locally Easy Steps By Amrqawasmeh Medium

Running models locally offers enhanced flexibility, privacy, and reduced latency, making it ideal for applications that require fast and secure inference. By optimizing performance and following best practices, you can ensure smooth and efficient model execution in your local environment.

Did you know you can load most large language models from Hugging Face directly on your local machine, without relying on platforms like Ollama, AI Studio, llama.cpp, or any such.

Run locally: the instructions below are tested on an RTX 4090 on WSL2 Ubuntu 22.04. Instructions will differ and may not work, depending on your setup.

Fortunately, Hugging Face regularly benchmarks the models and presents a leaderboard to help choose the best models available. Hugging Face also provides Transformers, a Python library that streamlines running an LLM locally.
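Whether instructions tested on one GPU carry over to another setup depends largely on whether the model weights fit in memory. The sketch below is a back-of-the-envelope estimate only: it counts weights and ignores activations and the KV cache, and the 20% headroom factor is an assumption chosen for illustration. The function names are hypothetical.

```python
def estimate_weight_memory_gb(n_params, bytes_per_param):
    """Approximate memory for the weights alone, in decimal gigabytes."""
    return n_params * bytes_per_param / 1e9


def fits_in_vram(n_params, bytes_per_param, vram_gb, headroom=0.2):
    """Rough check: weights plus an assumed headroom fraction must fit in VRAM."""
    needed = estimate_weight_memory_gb(n_params, bytes_per_param) * (1 + headroom)
    return needed <= vram_gb


# A nominal 8-billion-parameter model on a 24 GB card such as the RTX 4090:
print(estimate_weight_memory_gb(8e9, 2))   # 16-bit weights (2 bytes each)
print(fits_in_vram(8e9, 2, 24.0))          # half precision
print(fits_in_vram(8e9, 4, 24.0))          # full 32-bit precision
```

By this estimate, an 8B model needs about 16 GB in 16-bit precision, which fits on a 24 GB card, while 32-bit weights (about 32 GB) do not.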

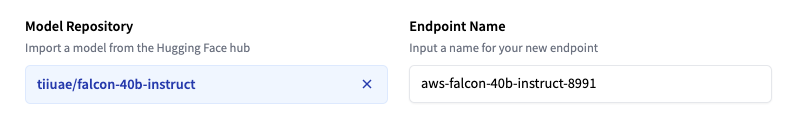

Deploy LLMs With Hugging Face Inference Endpoints

Run Hugging Face locally on your machine with our step-by-step guide, mastering inference and model training with ease and speed.

But here, I have two options for running it. Remotely, on a server: I send API calls and get responses from the server, which requires an internet connection. Locally, on the player's machine. Both are valid solutions, but each has advantages and disadvantages.

In this post, we'll learn how to download a Hugging Face large language model (LLM) and run it locally.

GitHub di37 running llms locally: a comprehensive guide for running large language models on your local hardware using popular frameworks like llama.cpp, Ollama, Hugging Face Transformers, vLLM, and LM Studio.
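The download-then-run-locally workflow mentioned above can be sketched as two separate steps, so that the second step works without an internet connection. This is an illustrative outline, not the method of any of the guides listed: the helper names, the `models` folder, and the folder naming scheme are assumptions. It relies on `snapshot_download` from `huggingface_hub` and the `from_pretrained` loaders in Transformers.

```python
from pathlib import Path


def local_dir_for(repo_id, root="models"):
    """Map a repo ID like 'org/name' to a local folder (naming is an assumption)."""
    return Path(root) / repo_id.replace("/", "--")


def download_model(repo_id, root="models"):
    """Step 1 (online): fetch all files of the model repository to disk."""
    from huggingface_hub import snapshot_download

    target = local_dir_for(repo_id, root)
    snapshot_download(repo_id=repo_id, local_dir=target)
    return target


def run_local(model_dir, prompt, max_new_tokens=64):
    """Step 2 (offline): load from disk only and generate a completion."""
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_dir, local_files_only=True)
    model = AutoModelForCausalLM.from_pretrained(
        model_dir, local_files_only=True, torch_dtype="auto"
    )
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # The decoded text includes the prompt followed by the generated tokens.
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

A typical use would be `path = download_model("<repo-id>")` once, followed by `run_local(path, "Hello")` as often as needed. Passing `local_files_only=True` makes the load fail fast if files are missing rather than silently reaching out to the network.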