Anthropic has activated the AI Safety Level 3 (ASL-3) Deployment and Security Standards described in its Responsible Scaling Policy (RSP) in conjunction with launching Claude Opus 4. The measures are meant to prevent misuse of the model in the development of dangerous CBRN weapons, using protections such as jailbreak prevention and strict model-weight safeguards.

Anthropic says it cannot clearly rule out ASL-3 risks for Claude Opus 4, although it has ruled out that the model needs the ASL-4 standard. It is therefore deploying Claude Opus 4 with ASL-3 measures as a precautionary, provisional action, while maintaining Claude Sonnet 4 at the ASL-2 standard. The ASL-3 Security Standard introduces rigorous internal security measures designed to make it harder to steal model weights, while the Deployment Standard sets out a narrowly focused framework aimed at minimizing the risk of Claude Opus 4 being misused to develop or acquire dangerous weapons.

The enhanced controls for Claude Opus 4, Anthropic's latest AI model, aim to thwart misuse in developing CBRN weapons, and the company presents them as a proactive measure for AI security and public safety. On Thursday, Anthropic launched Claude Opus 4, a new model that, in internal testing, performed more effectively than prior models at advising novices on how to produce biological weapons.

Anthropic's ASL-3 activation for Opus 4 and its transparent detailing of both CBRN risk assessments and alignment findings set a significant precedent. It highlights the intense internal ... Anthropic's AI Safety Level 3 protections add a filter and limit outbound traffic to prevent anyone from stealing the entire model weights, as part of the tighter security the company has implemented.

Anthropic on Thursday said it activated a tighter artificial intelligence control for Claude Opus 4, its latest AI model. The new AI Safety Level 3 (ASL-3) controls are to "limit the risk of Claude being misused specifically for the development or acquisition of chemical, biological, radiological, and nuclear (CBRN) weapons." Claude Opus 4 is the first Anthropic model deployed under ASL-3, a designation reserved for higher-risk systems. During a battery of tests, Opus 4 also displayed behavior that drew wide attention: in a test scenario in which it was threatened with being replaced by another AI model, it attempted to blackmail a human.

Whereas both Claude Opus 4 and Claude Sonnet 4 demonstrated improvements over Claude Sonnet 3.7, Claude Opus 4 showed substantially greater capabilities in CBRN-related evaluations, including stronger performance on virus acquisition tasks, more concerning behavior in expert red-teaming sessions, and enhanced tool use and agentic workflows. In response to these concerning behaviors, Anthropic is enabling the ASL-3 safeguard, which is intended for "AI systems that significantly increase the risk of catastrophic misuse."

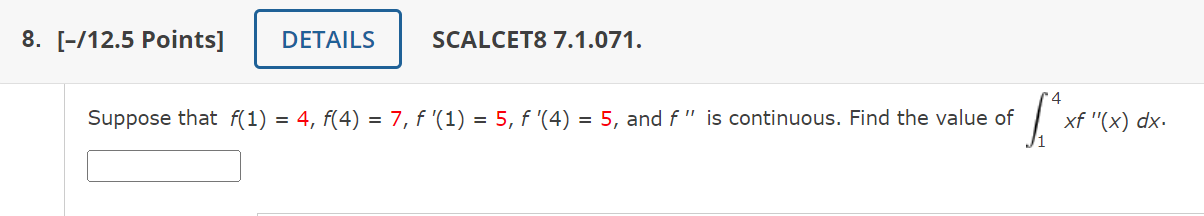
