Spacy Python

spaCy tags each of the tokens in a document with a part of speech, in two different formats: the coarse-grained Universal POS tag, stored in the token's `pos` and `pos_` properties, and the fine-grained, language-specific tag, stored in the `tag` and `tag_` properties. It also tags each token with a syntactic dependency to its `.head` token, stored in the `dep` and `dep_` properties.

I need to redeploy an existing, very old Python GCP Cloud Function that uses spaCy and other NLP tooling (which I am not familiar with) on a newer Python runtime (3.9 or above), sparing the details…
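
The attribute pairs described above can be inspected directly. This minimal sketch assumes spaCy v3, where the `Doc` constructor accepts `pos`, `tags`, `deps` and absolute `heads`. To avoid downloading a model, it builds a `Doc` by hand on a blank English pipeline with made-up annotations. In practice a trained pipeline such as `en_core_web_sm` assigns these annotations. The integer attributes (`pos`, `tag`, `dep`) hold IDs or string-store hashes. The underscore variants hold the readable strings. The `describe` helper is hypothetical.

```python
import spacy
from spacy.tokens import Doc

# A blank pipeline supplies a vocabulary without downloading a trained model.
nlp = spacy.blank("en")

# Hand-written (hypothetical) annotations; a trained pipeline would predict these.
doc = Doc(
    nlp.vocab,
    words=["spaCy", "tags", "tokens"],
    pos=["PROPN", "VERB", "NOUN"],     # coarse-grained Universal POS -> token.pos / token.pos_
    tags=["NNP", "VBZ", "NNS"],        # fine-grained tag -> token.tag / token.tag_
    deps=["nsubj", "ROOT", "dobj"],    # dependency label -> token.dep / token.dep_
    heads=[1, 1, 1],                   # absolute index of each token's head
)


def describe(token):
    """Pair each integer attribute with its string form, plus the head token."""
    return {
        "text": token.text,
        "pos": (token.pos, token.pos_),
        "tag": (token.tag, token.tag_),
        "dep": (token.dep, token.dep_),
        "head": token.head.text,
    }


for token in doc:
    print(describe(token))
```

The ROOT token ("tags") is its own head, which is how spaCy marks the root of a sentence.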

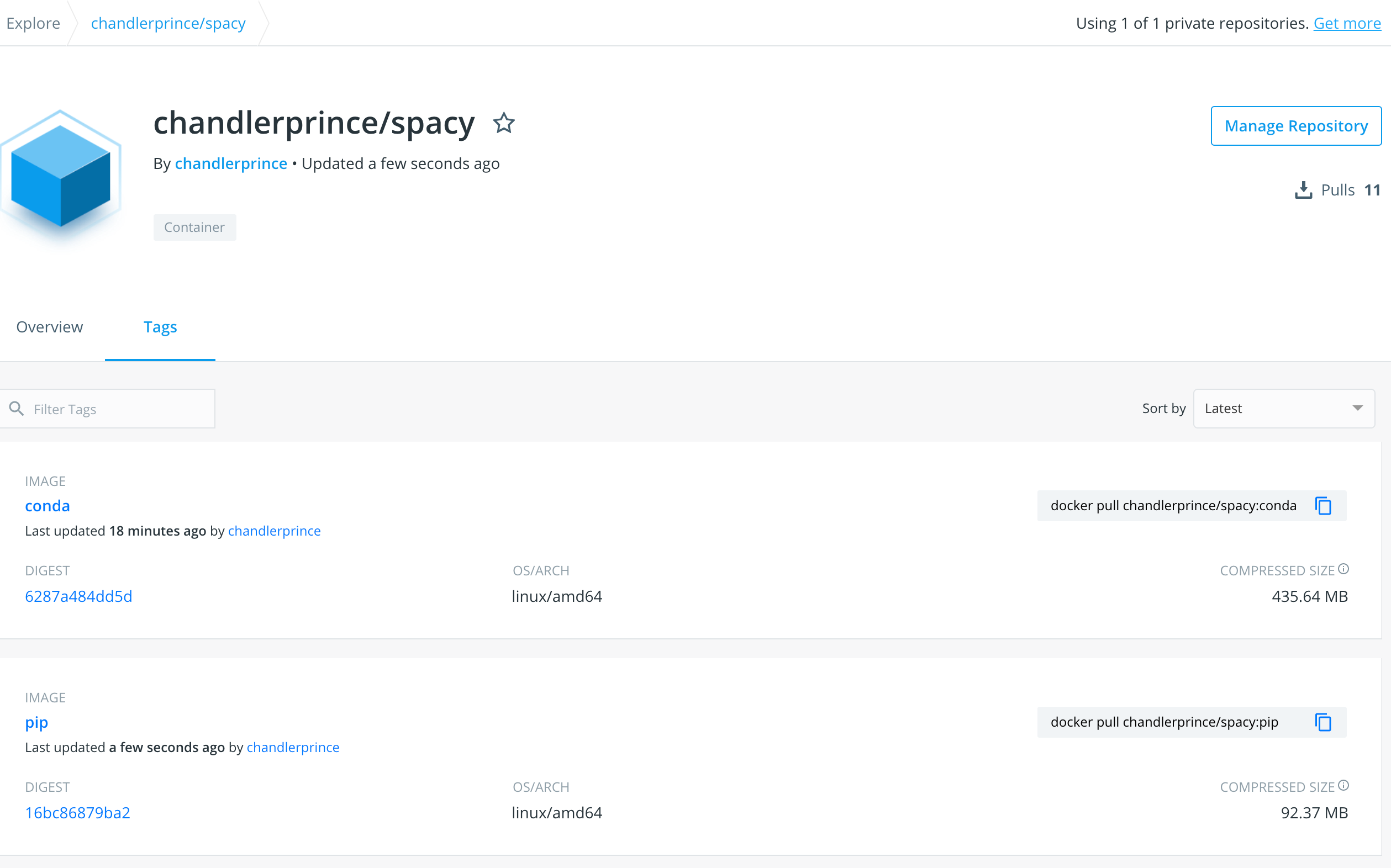

Spacy Industrial Strength Natural Language Processing In Python

spaCy does not currently support Python 3.13; there are no Python 3.13 wheels yet. The maintainers yanked version 3.8.5 because it incorrectly declared support for Python 3.13, and reverted to 3.8.4 as the stable release. Note that spaCy 3.8.4 requires Python >=3.9, <3.13.

To load a model, you first need to download it if you are working on your local machine (not on Google Colab). After `pip install -U spacy`, download the model with `python -m spacy download de_core_news_sm`, then load it with `nlp = spacy.load('de_core_news_sm')`.

When you run `spacy download en`, spaCy tries to find the best small model that matches your spaCy distribution. That small model defaults to `en_core_web_sm`, which comes in different variations corresponding to the different spaCy versions (for example, `spacy` and `spacy-nightly` have `en_core_web_sm` builds of different sizes). As of spaCy v3 the `en` shortcut is deprecated, and you should use the full package name instead (`python -m spacy download en_core_web_sm`).

I'm new to Python and ran into a problem I can't solve. I would like to install and use the spaCy package, so I opened cmd and ran `pip install spacy`; while installing the dependencies…
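
The version constraint and the download-then-load steps above can be combined into a small guard. This is a minimal sketch that assumes the spaCy 3.8.4 range quoted above and a pip-based environment. It checks whether the running interpreter is in range, which matters when choosing a runtime for a redeployed Cloud Function. It downloads the model only when `spacy.util.is_package` reports that the model is missing. The helper names (`python_supported`, `download_command`, `load_or_download`) are hypothetical.

```python
import importlib
import subprocess
import sys

# Range quoted above for spaCy 3.8.4 (Python >=3.9, <3.13); other spaCy
# releases declare different ranges, so adjust these for your version.
MIN_PYTHON = (3, 9)
MAX_PYTHON_EXCLUSIVE = (3, 13)


def python_supported(version_info=sys.version_info) -> bool:
    """Return True if (major, minor) lies in [MIN_PYTHON, MAX_PYTHON_EXCLUSIVE)."""
    major_minor = tuple(version_info[:2])
    return MIN_PYTHON <= major_minor < MAX_PYTHON_EXCLUSIVE


def download_command(model_name: str) -> list:
    """Argument list equivalent to `python -m spacy download <model_name>`."""
    return [sys.executable, "-m", "spacy", "download", model_name]


def load_or_download(model_name: str = "de_core_news_sm"):
    """Load an installed pipeline, downloading it first if it is missing."""
    if not python_supported():
        raise RuntimeError(f"Python {sys.version_info[:2]} is outside the supported range")
    import spacy  # deferred so the helpers above work without spaCy installed

    if not spacy.util.is_package(model_name):
        subprocess.run(download_command(model_name), check=True)
        importlib.invalidate_caches()  # let this process see the newly installed package
    return spacy.load(model_name)
```

Downloading at runtime is convenient locally. On a deployment target where the filesystem or network is restricted, it is likely safer to install the model at build time and keep only the `spacy.load` call.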

If you want spaCy v2 instead of v3, install it like this to get the most recent v2.x release without having to know the exact version number: `pip install "spacy~=2.0"` (this currently resolves to `spacy==2.3.7`). Similarly, if you need a specific minor version of v2 such as v2.3, you can also use `~=` to specify that: `pip install "spacy~=2.3.0"`.

I have a spaCy `Doc` that I would like to lemmatize. For example:

    import spacy

    nlp = spacy.load('en_core_web_lg')
    my_str = 'python is the greatest language in the world'
    doc = nlp(my_str)

How can I convert every token in the doc to its lemma?

I want to include hyphenated words, for example long-term, self-esteem, etc., as a single token in spaCy. I have looked at some similar posts on Stack Overflow, GitHub, its documentation and elsewhere…

I have a couple of questions regarding the parameters that we define in the `config.cfg` file. Although spaCy's docs do try to explain them, I feel that the explanation isn't really descriptive enough.
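
The `~=` specifiers above are PEP 440 "compatible release" clauses. `~=2.0` means `>=2.0, ==2.*`, and `~=2.3.0` means `>=2.3.0, ==2.3.*`. The sketch below is a simplified, hand-rolled reading of that rule for illustration only. It assumes plain numeric versions with no pre-, post- or dev releases, and pip itself relies on the `packaging` library rather than anything like this. The function names are hypothetical.

```python
def _parse(version: str) -> tuple:
    """Parse a plain numeric release such as '2.3.7' into (2, 3, 7)."""
    return tuple(int(part) for part in version.split("."))


def _pad(parts: tuple, length: int) -> tuple:
    """Right-pad with zeros so that '2.3' compares equal to '2.3.0'."""
    return parts + (0,) * (length - len(parts))


def compatible_release(version: str, spec: str) -> bool:
    """Simplified check for `version ~= spec` (numeric releases only).

    ~=X.Y   means >=X.Y   and ==X.*
    ~=X.Y.Z means >=X.Y.Z and ==X.Y.*
    """
    spec_parts = _parse(spec)
    if len(spec_parts) < 2:
        raise ValueError("~= needs at least two release components")
    version_parts = _parse(version)

    # Lower bound: version >= spec, comparing zero-padded tuples.
    length = max(len(spec_parts), len(version_parts))
    lower_bound_ok = _pad(version_parts, length) >= _pad(spec_parts, length)

    # Upper bound: all but the last spec component must match exactly.
    prefix = spec_parts[:-1]
    same_prefix = _pad(version_parts, len(prefix))[: len(prefix)] == prefix
    return lower_bound_ok and same_prefix
```

With this reading, `compatible_release("2.3.7", "2.0")` is true while `compatible_release("3.0.0", "2.0")` is false. That matches why `spacy~=2.0` stays on the v2 line.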
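
For the lemmatization question, each token exposes its lemma as `token.lemma_`, so a list comprehension over the `Doc` is enough. The helper names below are hypothetical. To keep the sketch runnable without downloading `en_core_web_lg`, the demo `Doc` is built from the question's sentence with hand-written lemmas. With a trained pipeline, `doc = nlp(my_str)` fills in `lemma_` for you.

```python
from typing import List

import spacy
from spacy.tokens import Doc


def lemmatize(doc: Doc) -> List[str]:
    """Return the lemma of every token, in document order."""
    return [token.lemma_ for token in doc]


def lemmatized_text(doc: Doc) -> str:
    """Rebuild the text from lemmas, keeping each token's original trailing whitespace."""
    return "".join(token.lemma_ + token.whitespace_ for token in doc)


# Demo without a trained model: lemmas are supplied by hand here.
my_str = "python is the greatest language in the world"
words = my_str.split()
nlp = spacy.blank("en")
demo = Doc(
    nlp.vocab,
    words=words,
    spaces=[True] * (len(words) - 1) + [False],  # no space after the last word
    lemmas=["python", "be", "the", "great", "language", "in", "the", "world"],
)
```

`lemmatized_text` uses `whitespace_` so that the rebuilt string keeps the original spacing. It does not insert a space after every token, which matters once punctuation is involved.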
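
One way to keep hyphenated words such as "long-term" together is to rebuild the tokenizer's infix patterns without the rule that splits on a hyphen between letters. This approach is adapted from the "Modifying existing rule sets" example in spaCy's tokenizer documentation. The sketch assumes spaCy v3 and uses a blank English pipeline. The pattern list mirrors the English defaults used in that example and may differ in your version, so compare it with `nlp.Defaults.infixes` before relying on it. The `tokens` helper is hypothetical.

```python
import spacy
from spacy.lang.char_classes import (
    ALPHA,
    ALPHA_LOWER,
    ALPHA_UPPER,
    CONCAT_QUOTES,
    LIST_ELLIPSES,
    LIST_ICONS,
)
from spacy.util import compile_infix_regex

nlp = spacy.blank("en")  # the same change works on a loaded pipeline's tokenizer

# English-style infix rules, minus the one that splits on hyphens between letters.
infixes = (
    LIST_ELLIPSES
    + LIST_ICONS
    + [
        r"(?<=[0-9])[+\-\*^](?=[0-9-])",
        r"(?<=[{al}{q}])\.(?=[{au}{q}])".format(
            al=ALPHA_LOWER, au=ALPHA_UPPER, q=CONCAT_QUOTES
        ),
        r"(?<=[{a}]),(?=[{a}])".format(a=ALPHA),
        # Omitted: r"(?<=[{a}])(?:{h})(?=[{a}])".format(a=ALPHA, h=HYPHENS)
        r"(?<=[{a}0-9])[:<>=/](?=[{a}])".format(a=ALPHA),
    ]
)
nlp.tokenizer.infix_finditer = compile_infix_regex(infixes).finditer


def tokens(text):
    """Tokenize with the modified rules and return the token strings."""
    return [t.text for t in nlp(text)]
```

Only the infix rules change, so prefixes and suffixes such as a trailing period are still split off as before.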
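
spaCy v3's `config.cfg` is parsed with Thinc's `Config` class, so you can load a fragment and inspect the typed values directly. The excerpt below is hypothetical: real files generated by `python -m spacy init config` contain many more sections. The one-line descriptions paraphrase spaCy's training-config documentation and should be checked against the docs for your version.

```python
from thinc.api import Config

# Hypothetical excerpt of the [training] block of a spaCy v3 config.cfg.
CONFIG_TEXT = """
[training]
seed = 0
dropout = 0.1
patience = 1600
max_epochs = 0
max_steps = 20000
eval_frequency = 200
"""

config = Config().from_str(CONFIG_TEXT)  # values are parsed into Python types
training = config["training"]

# Paraphrased meanings of each setting (verify against your spaCy version's docs).
DESCRIPTIONS = {
    "seed": "random seed, for reproducible runs",
    "dropout": "dropout rate applied during training",
    "patience": "steps to continue without improvement before stopping early (0 disables)",
    "max_epochs": "maximum number of epochs (0 means no limit)",
    "max_steps": "maximum number of update steps (0 means no limit)",
    "eval_frequency": "number of steps between evaluations during training",
}

for key, value in training.items():
    print(f"{key} = {value!r}: {DESCRIPTIONS.get(key, 'see the spaCy docs')}")
```

Reading the parsed values this way shows what type each setting ends up as (for example, `dropout` becomes a float and `patience` an int), which can make the documentation easier to map onto your own file.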
