Word Embeddings In NLP Pdf Artificial Intelligence Intelligence

When it comes to word embeddings in deep learning tasks, the choice between pre-trained embeddings and random initialization can significantly affect the performance of an NLP model. Prediction-based embeddings are valuable for capturing semantic relationships and contextual information in text, which makes them useful for a variety of NLP tasks such as machine translation, sentiment analysis, and document clustering.
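To make the choice between pre-trained embeddings and random initialization concrete, here is a minimal sketch in plain Python. It assumes a hypothetical `pretrained` dictionary standing in for vectors loaded from a real embedding file; the function name `build_embedding_matrix`, the toy vectors, and the `[-0.1, 0.1]` initialization range are illustrative assumptions, not taken from any particular library.

```python
import random


def build_embedding_matrix(vocab, pretrained, dim, seed=0):
    """Return one vector per vocabulary word.

    Words found in `pretrained` reuse their existing vector; all other
    words get a small random vector (random initialization).
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    matrix = []
    n_pretrained = 0
    for word in vocab:
        if word in pretrained:
            matrix.append(list(pretrained[word]))
            n_pretrained += 1
        else:
            # Assumed init range; real models choose this differently.
            matrix.append([rng.uniform(-0.1, 0.1) for _ in range(dim)])
    return matrix, n_pretrained


# Toy stand-in for pre-trained vectors (hypothetical values).
pretrained = {"good": [0.9, 0.1, 0.0], "bad": [-0.9, 0.1, 0.0]}
vocab = ["good", "bad", "meh"]

matrix, hits = build_embedding_matrix(vocab, pretrained, dim=3)
print(f"{hits} of {len(vocab)} words initialized from pre-trained vectors")
```

In practice the resulting matrix would seed a model's embedding layer, and whether those rows are then frozen or fine-tuned is a separate design decision.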

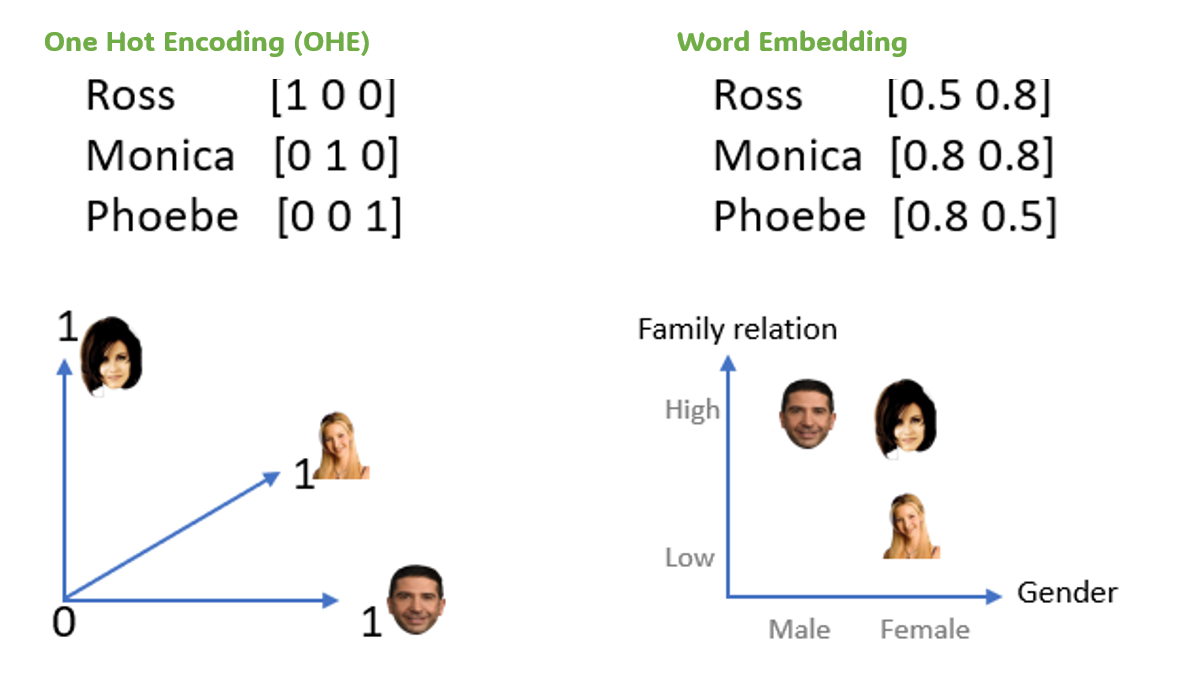

Text Representation And Word Embeddings For NLP Tasks By Michal

In natural language processing (NLP), text representation is the foundation that enables machines to perform tasks like language translation, text summarization, and sentiment analysis. Without an appropriate method of representing text, algorithms would be unable to process the unstructured nature of human language. Text representation converts words and documents into numbers, a format that algorithms can operate on directly.

Most of the proposed approaches start with various NLP steps that analyze requirements statements, extract their linguistic information, and convert them to easy-to-process representations, such as lists of features or embedding-based vector representations.

Natural language processing has long been a fundamental area in computer science. However, its trajectory changed dramatically with the introduction of word embeddings. Before embeddings, NLP relied primarily on rule-based approaches that treated words as discrete tokens. With word embeddings, models gained the ability to capture word meaning through vector-space representations.
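As a small illustration of converting words and documents into numbers, the sketch below builds a bag-of-words count vector for each document. This is one of the simplest "lists of features" representations, not an embedding, and the helper names `build_vocab` and `bag_of_words`, along with the whitespace tokenization, are assumptions made for the example.

```python
from collections import Counter


def build_vocab(docs):
    """Assign each distinct lowercase token an index, in order of first appearance."""
    vocab = {}
    for doc in docs:
        for token in doc.lower().split():
            vocab.setdefault(token, len(vocab))
    return vocab


def bag_of_words(doc, vocab):
    """Count how often each vocabulary token occurs in `doc`."""
    counts = Counter(doc.lower().split())
    vector = [0] * len(vocab)
    for token, n in counts.items():
        if token in vocab:  # tokens outside the vocabulary are ignored
            vector[vocab[token]] = n
    return vector


docs = ["the cat sat", "the dog sat down"]
vocab = build_vocab(docs)
vectors = [bag_of_words(d, vocab) for d in docs]

for doc, vec in zip(docs, vectors):
    print(doc, "->", vec)
```

Each word here is still a discrete token with its own column, so "cat" and "dog" are no closer to each other than to "down". That limitation is what dense embeddings are meant to address.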

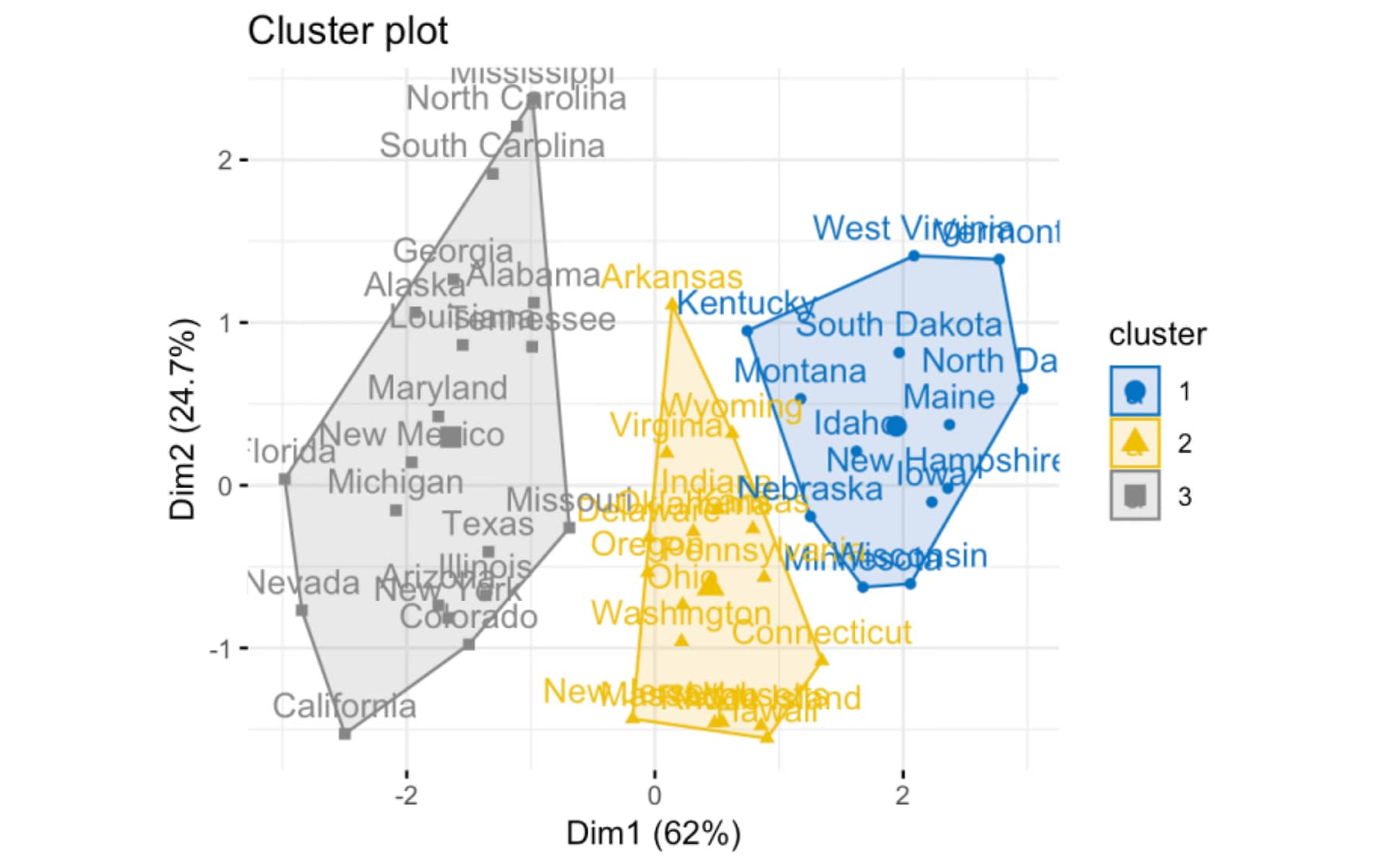

NLP Unit 1 Pdf Machine Learning Artificial Intelligence

The material explains how text can be represented numerically to enable machine processing, focusing on word2vec, GloVe, and transformer-based embeddings. The appendix provides practical insights into using these methods for various NLP tasks.

Below, we'll overview what word embeddings are, demonstrate how to build and use them, talk about important considerations regarding bias, and apply all this to a document clustering task. The corpus we'll use is Melanie Walsh's collection of ~380 obituaries from the New York Times.

We will explore how these techniques convert text into numerical forms, enabling computers to perform complex NLP tasks such as sentiment analysis, topic modeling, and more. Text embeddings are generated by neural network models that learn to map text to vectors such that words with similar meanings have similar representations. These vectors contain a compressed version of the original text, preserving semantic properties such as word similarity and syntactic relationships.
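The idea that words with similar meanings have similar representations is usually checked with cosine similarity. The sketch below uses hand-written three-dimensional toy vectors, which are assumptions for illustration only; real embeddings from word2vec or GloVe are learned from a corpus and typically have hundreds of dimensions. The helper `most_similar` is likewise a hypothetical name, not a library function.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def most_similar(word, vectors):
    """Return the other word whose vector is closest to `word` by cosine similarity."""
    target = vectors[word]
    candidates = [w for w in vectors if w != word]
    return max(candidates, key=lambda w: cosine_similarity(target, vectors[w]))


# Hand-made toy vectors (not learned from data).
toy_vectors = {
    "king": [0.8, 0.6, 0.1],
    "queen": [0.7, 0.7, 0.1],
    "apple": [0.1, 0.0, 0.9],
}

print(most_similar("king", toy_vectors))
```

The same similarity measure can be applied to document vectors, for example ones obtained by averaging word vectors, which is one common starting point for document clustering.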

GitHub Jordanearnest1 NLP Word Embeddings Training A Model To Learn

Word Embeddings In NLP A Complete Guide

A Comprehensive Guide To Word Embeddings In Natural Language Processing