Tokenization for Improved Data Security

Tokenization, when applied to data security, is the process of substituting a sensitive data element with a non-sensitive equivalent, referred to as a token, that has no intrinsic or exploitable meaning or value. The token is a reference (i.e., an identifier) that maps back to the sensitive data through a tokenization system. More broadly, tokenization is the process of creating a digital representation of a real thing; besides protecting sensitive data, it can also be used to process large amounts of data efficiently.
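The mapping between a token and its original value can be sketched as a small in-memory vault. This is a minimal illustration in Python, not a production design: the `TokenVault` class, its method names, and the `tok_` prefix are assumptions made for this example, and a real tokenization system would keep the mapping in persistent, access-controlled storage.

```python
import secrets


class TokenVault:
    """In-memory stand-in for a tokenization system (hypothetical, for illustration)."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so one value always maps to one token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        # The token is random, so it reveals nothing about the value it replaces.
        token = "tok_" + secrets.token_hex(16)
        while token in self._token_to_value:  # guard against an (unlikely) collision
            token = "tok_" + secrets.token_hex(16)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only the vault's mapping can turn a token back into the original value.
        return self._token_to_value[token]


vault = TokenVault()
card_number = "4111 1111 1111 1111"  # a commonly used test card number
token = vault.tokenize(card_number)
original = vault.detokenize(token)
```

Downstream systems would store and pass around `token` only; the card number stays inside the vault.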

In data security, tokenization converts sensitive data into a non-sensitive digital replacement, called a token, that maps back to the original. For example, sensitive data can be mapped to a token and the original placed in a digital vault for secure storage. Put another way, tokenization hides the contents of a dataset by replacing sensitive or private elements with non-sensitive, randomly generated tokens, such that the link between the token values and the real values cannot be reverse engineered.

Typical targets are primary account numbers (PANs), protected health information (PHI), personally identifiable information (PII), and other sensitive data elements, which are replaced by surrogate values, or tokens. Tokenization is sometimes described as a form of encryption, but the two terms are typically used differently: encrypted data can be recovered by anyone who holds the key, whereas a randomly generated token can be resolved only through the tokenization system's mapping.

The term is also used for representing assets on a blockchain. In that sense, tokenization may facilitate capital formation and enhance investors' ability to use their assets as collateral. Enchanted by these possibilities, new entrants and many traditional firms are embracing onchain products. As powerful as blockchain technology is, it does not have magical abilities to transform the nature of the underlying asset.

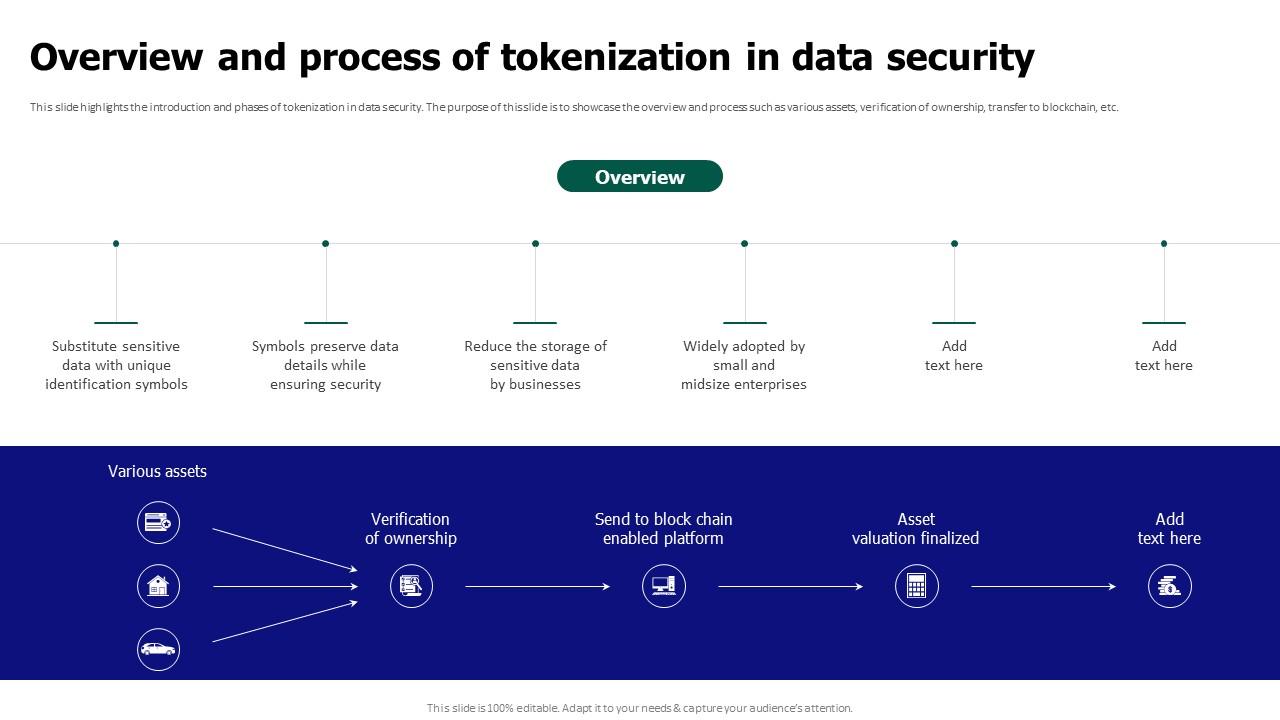

Data tokenization, as a broad term, is the process of replacing raw data with a digital representation. In data security, it replaces sensitive data with randomized, non-sensitive substitutes, called tokens, that have no traceable relationship back to the original data. This ensures that sensitive information, such as credit card numbers or personal identification details, is not exposed in plaintext. Because tokens are randomly generated and hold no intrinsic value, they are meaningless to anyone who intercepts them without access to the original information. A token has no value or meaning outside its intended system and, without proper authorization, cannot be used to access the data it shields.
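The authorization requirement can be illustrated with a short Python sketch in which only named callers may exchange a token for the original value. The `GuardedVault` class, the `AccessDenied` exception, and the caller names `billing` and `analytics` are all hypothetical; a real system would rely on proper authentication rather than a plain string.

```python
import secrets


class AccessDenied(Exception):
    """Raised when a caller is not allowed to detokenize (hypothetical)."""


class GuardedVault:
    """Toy vault that checks the caller before revealing original data."""

    def __init__(self, authorized_callers):
        self._authorized = set(authorized_callers)
        self._store = {}

    def tokenize(self, value: str) -> str:
        # Any caller may obtain a token; the token itself is just random text.
        token = secrets.token_urlsafe(16)
        self._store[token] = value
        return token

    def detokenize(self, token: str, caller: str) -> str:
        # Without authorization, holding the token gives no access to the data.
        if caller not in self._authorized:
            raise AccessDenied(f"{caller} may not detokenize")
        return self._store[token]


guarded = GuardedVault(authorized_callers={"billing"})
ssn_token = guarded.tokenize("123-45-6789")

revealed = guarded.detokenize(ssn_token, caller="billing")

try:
    guarded.detokenize(ssn_token, caller="analytics")
    intercepted = True
except AccessDenied:
    intercepted = False
```

In this sketch an intercepted token is useless to the `analytics` caller, which matches the point that a token cannot be used to reach the data it shields without proper authorization.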


