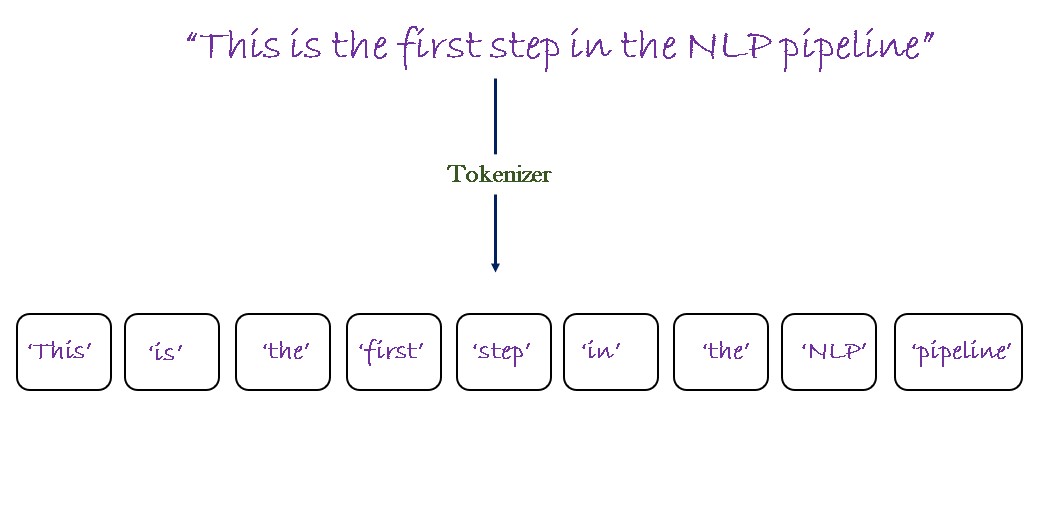

Tokenization, when applied to data security, is the process of substituting a sensitive data element with a non-sensitive equivalent, referred to as a token, that has no intrinsic or exploitable meaning or value. The token is a reference (i.e., an identifier) that maps back to the sensitive data through a tokenization system. More broadly, tokenization is the process of creating a digital representation of a real thing. In that broader sense, tokenization can be used to protect sensitive data or to process large amounts of data efficiently.
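
The mapping from a token back to the sensitive data through a tokenization system can be sketched in Python as follows. This is a minimal illustration that assumes an in-memory dictionary stands in for the system's secure storage. The `TokenVault` class and its `tokenize`/`detokenize` methods are hypothetical names, not a real library's API. Tokens come from Python's standard `secrets` module, so they carry no information about the values they replace.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory token vault, for illustration only.

    A real tokenization system would keep this mapping in hardened,
    access-controlled storage rather than a Python dict.
    """

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # The token is random, so it reveals nothing about `value`.
        token = secrets.token_urlsafe(16)
        while token in self._token_to_value:  # regenerate on the (unlikely) collision
            token = secrets.token_urlsafe(16)
        self._token_to_value[token] = value
        return token

    def detokenize(self, token: str) -> str:
        # Only the holder of the vault can map a token back to the original.
        return self._token_to_value[token]


vault = TokenVault()
token = vault.tokenize("4111 1111 1111 1111")  # a common test card number
print(token)                    # random, different on every run
print(vault.detokenize(token))  # 4111 1111 1111 1111
```

Because the token is generated randomly rather than computed from the card number, the only way back to the original is a lookup in the vault, which is why a production system concentrates its protection on that vault.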

In data security, tokenization is the process of converting sensitive data into a non-sensitive digital replacement, called a token, that maps back to the original. Tokenization can help protect sensitive information: for example, sensitive data can be mapped to a token and placed in a digital vault for secure storage. Tokenization is also defined as the process of hiding the contents of a dataset by replacing sensitive or private elements with a series of non-sensitive, randomly generated elements (called tokens) such that the link between the token values and the real values cannot be reverse engineered. In this way, PANs (primary account numbers), PHI (protected health information), PII (personally identifiable information), and other sensitive data elements are replaced by surrogate values, or tokens. The token has no exploitable meaning or value outside of its intended system. Tokenization is sometimes described as a form of encryption, but the two terms are typically used differently: an encrypted value can be decrypted by anyone holding the key, whereas a randomly generated token can only be mapped back through the tokenization system.
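
As a sketch of replacing PANs, PHI, and PII with surrogate values, the function below swaps the sensitive fields of a record for random tokens and keeps the originals in a vault. The field names, the `tok_` prefix, and the `tokenize_record` helper are hypothetical choices for illustration, and a plain dict again stands in for the secure digital vault.

```python
import secrets

# Hypothetical policy: which fields of a record hold sensitive data.
SENSITIVE_FIELDS = {"pan", "email", "diagnosis"}


def tokenize_record(record: dict[str, str], vault: dict[str, str]) -> dict[str, str]:
    """Return a copy of `record` with sensitive fields replaced by random tokens.

    Each original value is stored in `vault` (token -> value), which stands in
    for the secure vault of a real tokenization system.
    """
    safe: dict[str, str] = {}
    for field, value in record.items():
        if field in SENSITIVE_FIELDS:
            token = "tok_" + secrets.token_hex(8)
            while token in vault:  # regenerate on the (unlikely) collision
                token = "tok_" + secrets.token_hex(8)
            vault[token] = value
            safe[field] = token
        else:
            safe[field] = value  # non-sensitive fields pass through unchanged
    return safe


vault: dict[str, str] = {}
record = {
    "order_id": "A-1001",
    "pan": "4111111111111111",    # payment card number (PAN)
    "email": "jane@example.com",  # contact detail (PII)
    "diagnosis": "hypertension",  # health information (PHI)
}
safe_record = tokenize_record(record, vault)
print(safe_record)                # order_id kept; other fields become tok_... values
print(vault[safe_record["pan"]])  # 4111111111111111
```

Downstream systems can then store or process `safe_record` without ever holding the raw card number, email address, or diagnosis.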

Data tokenization, as a broad term, is the process of replacing raw data with a digital representation. In data security, tokenization replaces sensitive data with randomized, non-sensitive substitutes, called tokens, that have no traceable relationship back to the original data.
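
Having "no traceable relationship back to the original data" is what separates a random token from a substitute computed from the data itself. The sketch below is an illustration rather than a production design. It contrasts an unsalted SHA-256 hash of a 4-digit PIN, which anyone can recompute and therefore invert by trying all 10,000 possible PINs, with a random token from Python's `secrets` module, which cannot be matched that way. The PIN value and the helper names are hypothetical.

```python
import hashlib
import secrets
from typing import Optional


def hash_value(value: str) -> str:
    # Deterministic: anyone who knows (or guesses) the value can recompute this.
    return hashlib.sha256(value.encode("utf-8")).hexdigest()


def random_token() -> str:
    # Random: generated independently of the value it will stand in for.
    # 32 random bytes give 64 hex characters, the same length as a SHA-256 digest.
    return secrets.token_hex(32)


def recover_pin(substitute: str) -> Optional[str]:
    """Try every 4-digit PIN and return the one whose hash equals `substitute`."""
    for n in range(10_000):
        candidate = f"{n:04d}"
        if hash_value(candidate) == substitute:
            return candidate
    return None


pin = "0427"  # hypothetical PIN
hashed = hash_value(pin)
token = random_token()

print(recover_pin(hashed))  # 0427: the hash is traceable back to the PIN
print(recover_pin(token))   # None: the token has no relationship to the PIN
```

The same concern applies to any data drawn from a small or predictable set of values: if a substitute can be recomputed from the original, it can often be reverse engineered, which is why the definitions above stress randomly generated tokens.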