Understanding BERT

BERT (Bidirectional Encoder Representations from Transformers) has proved to be a breakthrough in natural language processing and language understanding. At its release it achieved state-of-the-art results on a range of NLP tasks. BERT is a language model introduced in October 2018 by researchers at Google. [1][2] It learns to represent text as a sequence of vectors using self-supervised learning, and it uses the encoder-only Transformer architecture.
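To make the self-supervised idea concrete, here is a minimal sketch of how training examples can be built from raw text alone: some tokens are hidden and the model is asked to recover them. The function name `mask_tokens`, its parameters, and the example sentence are illustrative assumptions, not part of any library. The sketch also simplifies BERT's actual masking recipe, which selects about 15% of tokens and replaces only most of them with `[MASK]`.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Build one masked-token training example (hypothetical helper).

    Returns (masked_tokens, labels). labels[i] holds the original token
    wherever a mask was applied and None elsewhere, so a loss would be
    computed only at the masked positions.
    """
    rng = random.Random(seed)  # seeded so the example is repeatable
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)   # hide the token from the model
            labels.append(tok)    # keep the answer as the training target
        else:
            masked.append(tok)
            labels.append(None)   # position does not contribute to the loss
    return masked, labels

# Whitespace splitting stands in for a real subword tokenizer.
tokens = "the bank raised interest rates again".split()
masked, labels = mask_tokens(tokens, mask_prob=0.3, seed=1)
print(masked)
print(labels)
```

No human annotation is needed here: the labels come from the text itself, which is what makes the objective self-supervised.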

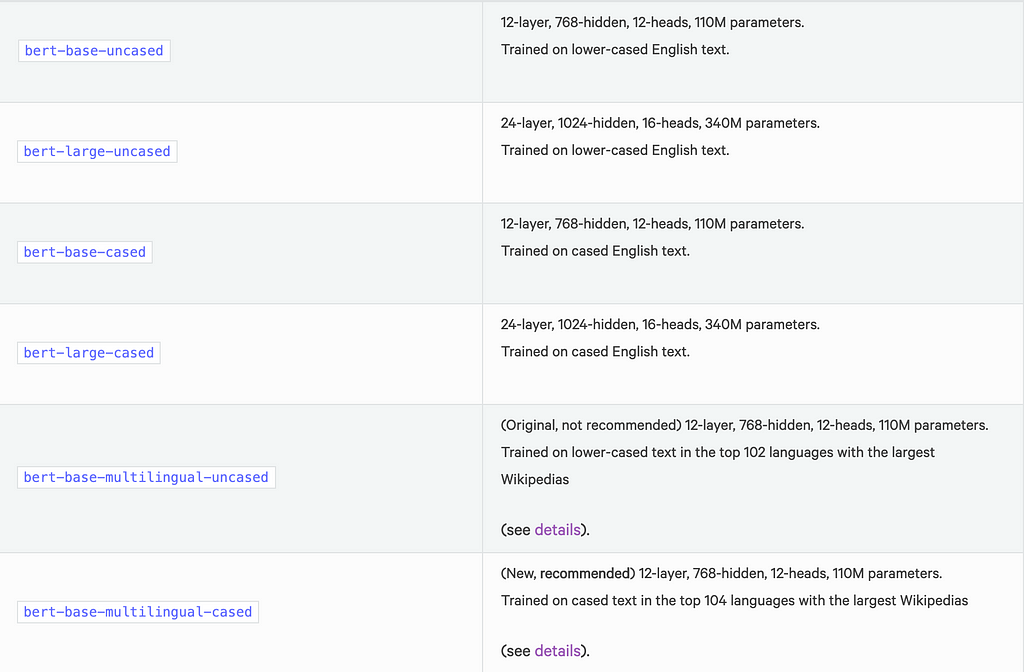

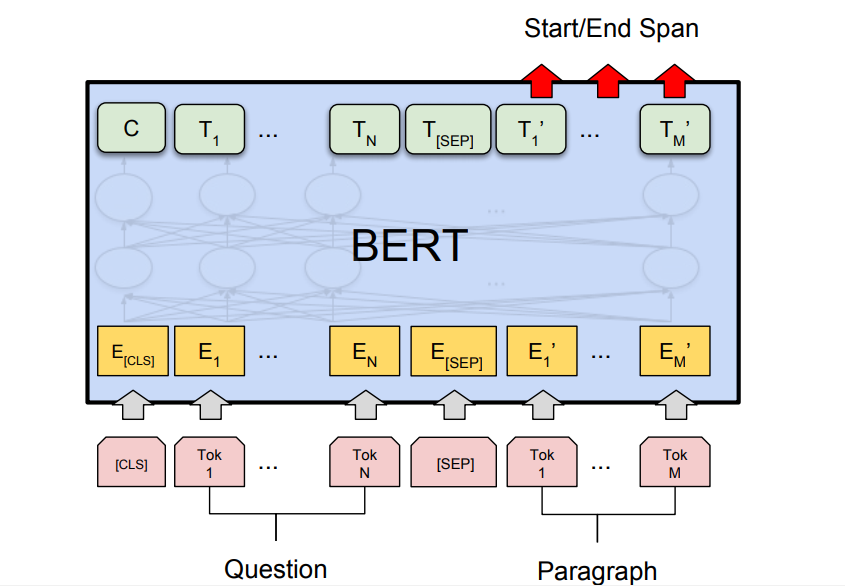

BERT is a groundbreaking model in natural language processing (NLP) and deep learning. It revolutionized the NLP space by achieving state-of-the-art results on 11 of the most common NLP tasks, outperforming previous models and making it the jack of all NLP trades. This guide covers what BERT is, why it is different, and how to get started using it: what is BERT used for, and how does BERT work?

BERT uses a Transformer-based neural network to understand human language. It employs an encoder-only architecture: the original Transformer architecture has both encoder and decoder modules, and BERT keeps only the encoder. Developed by Google and introduced in 2018, BERT has significantly improved the way machines understand and process human language.

At the end of 2018, researchers at Google AI Language open-sourced BERT as a new technique for NLP. It was introduced by Devlin et al. in the paper “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding” and represents a breakthrough in NLP by applying the Transformer architecture to bidirectional language modeling.

BERT surpasses traditional methods in two key areas. The first is contextual understanding: unlike static embeddings, which assign a word the same vector everywhere, BERT adjusts the representation of each word based on its context. The second is bidirectionality: BERT attends to the entire sequence at once, so each token's representation draws on both its left and right context, capturing richer semantics.

This paper will explore the design and uses of BERT, contrasting it with other state-of-the-art NLP models such as GPT-3 and RoBERTa, and offering an example of its implementation using the Hugging Face Transformers library.
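The two properties described above, contextual representations and bidirectionality, can be illustrated with a toy self-attention layer in plain Python. This is only a sketch under stated assumptions: the three-dimensional vectors in `EMB` are made up, the projections are the identity, and `attend` is a hypothetical helper rather than BERT's real implementation or a Hugging Face API. It compares what the first token "bank" looks like after attention when it can see the whole sequence (bidirectional) and when it can see only itself and earlier tokens (causal, left-to-right).

```python
import math

# Made-up static embeddings: each word has one fixed vector.
EMB = {
    "bank":  [1.0, 0.0, 1.0],
    "river": [0.0, 1.0, 0.0],
    "loan":  [1.0, 1.0, 0.0],
}

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attend(words, causal):
    """Toy single-head self-attention with identity projections.

    causal=False: every position sees the whole sequence (encoder style).
    causal=True:  position i sees only positions 0..i (left-to-right style).
    """
    vectors = [EMB[w] for w in words]
    dim = len(vectors[0])
    out = []
    for i, query in enumerate(vectors):
        visible = list(range(i + 1)) if causal else list(range(len(vectors)))
        scores = [sum(q * k for q, k in zip(query, vectors[j])) for j in visible]
        weights = softmax(scores)
        # Output is the attention-weighted average of the visible vectors.
        out.append([
            sum(w * vectors[j][d] for w, j in zip(weights, visible))
            for d in range(dim)
        ])
    return out

river_sentence = ["bank", "river"]
loan_sentence = ["bank", "loan"]

bidir_river = attend(river_sentence, causal=False)[0]
bidir_loan = attend(loan_sentence, causal=False)[0]
causal_river = attend(river_sentence, causal=True)[0]
causal_loan = attend(loan_sentence, causal=True)[0]

print("bidirectional 'bank':", bidir_river, bidir_loan)
print("causal 'bank':       ", causal_river, causal_loan)
```

With the causal mask, the first token cannot see what follows, so "bank" gets the same vector in both sentences. With bidirectional attention, the word to its right changes its representation, which is the behaviour the text attributes to BERT.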
