Tokenization plays a crucial role in processing text for AI models, particularly large language models (LLMs). It serves as both the initial and the final step of the pipeline. On the way in, it transforms human-readable text into a format the model can interpret. On the way out, it converts the model's output back into readable text.
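The two directions, encoding text into token IDs and decoding IDs back into text, can be sketched with a toy tokenizer. This is a minimal sketch under stated assumptions: `ToyTokenizer` and `PIECES` are hypothetical, the vocabulary is hand-written, and segmentation uses greedy longest-match. Production LLM tokenizers (for example BPE-based ones) learn their vocabularies from data and handle unseen input differently.

```python
import string
from typing import Dict, List

# Hypothetical vocabulary: a few multi-character pieces plus single characters.
PIECES = ["token", "ization", " is", " fun"] + list(string.ascii_lowercase) + [" ", "."]


class ToyTokenizer:
    """Greedy longest-match subword tokenizer over a fixed vocabulary (illustrative only)."""

    def __init__(self, pieces: List[str]) -> None:
        # Each piece gets an integer ID; the reverse map is used for decoding.
        self.piece_to_id: Dict[str, int] = {p: i for i, p in enumerate(pieces)}
        self.id_to_piece: Dict[int, str] = {i: p for p, i in self.piece_to_id.items()}
        self.max_len = max(len(p) for p in pieces)

    def encode(self, text: str) -> List[int]:
        """Initial step: human-readable text -> list of token IDs."""
        ids: List[int] = []
        i = 0
        while i < len(text):
            # Try the longest candidate piece first, then progressively shorter ones.
            for length in range(min(self.max_len, len(text) - i), 0, -1):
                piece = text[i:i + length]
                if piece in self.piece_to_id:
                    ids.append(self.piece_to_id[piece])
                    i += length
                    break
            else:
                raise ValueError(f"character {text[i]!r} is not in the vocabulary")
        return ids

    def decode(self, ids: List[int]) -> str:
        """Final step: token IDs -> human-readable text."""
        return "".join(self.id_to_piece[i] for i in ids)


tok = ToyTokenizer(PIECES)
ids = tok.encode("tokenization is fun.")
print(ids)              # [0, 1, 2, 3, 31]
print(tok.decode(ids))  # tokenization is fun.
```

Because every piece is mapped one-to-one to an ID, decoding exactly reverses encoding here; real tokenizers add normalization and special tokens that can make the round trip less trivial.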

Tokenization also underpins many other AI applications, particularly in natural language processing (NLP). Breaking text into manageable tokens makes it possible to build text embeddings, which are dense vector representations of the original text. Within LLMs, the tokenizer is what enables the model to interpret and generate human language. The choice of tokenization strategy matters beyond the model itself: when generative AI is integrated into an application, it can significantly affect how well keyword searches and filtering mechanisms work.
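To make the effect on keyword search concrete, here is a minimal sketch comparing two simple tokenization strategies on the same documents. The function names and sample documents are hypothetical; the point is only that a naive whitespace split keeps case and punctuation attached to words, so an exact-token keyword match can silently fail.

```python
import re
from typing import Callable, List, Set


def whitespace_tokens(text: str) -> Set[str]:
    # Naive strategy: split on whitespace only, keeping case and punctuation.
    return set(text.split())


def normalized_tokens(text: str) -> Set[str]:
    # Alternative strategy: lowercase, then keep runs of word characters.
    return set(re.findall(r"\w+", text.lower()))


def keyword_search(docs: List[str], keyword: str,
                   tokenize: Callable[[str], Set[str]]) -> List[int]:
    # Return indices of documents whose tokens include every token of the keyword.
    query = tokenize(keyword)
    return [i for i, doc in enumerate(docs) if query <= tokenize(doc)]


docs = [
    "Tokenization, in LLMs, matters.",
    "Embeddings are dense vectors.",
]
print(keyword_search(docs, "tokenization", whitespace_tokens))  # []
print(keyword_search(docs, "tokenization", normalized_tokens))  # [0]
```

The same query finds nothing under one strategy and the relevant document under the other. Filters built on token matches inherit the same sensitivity.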

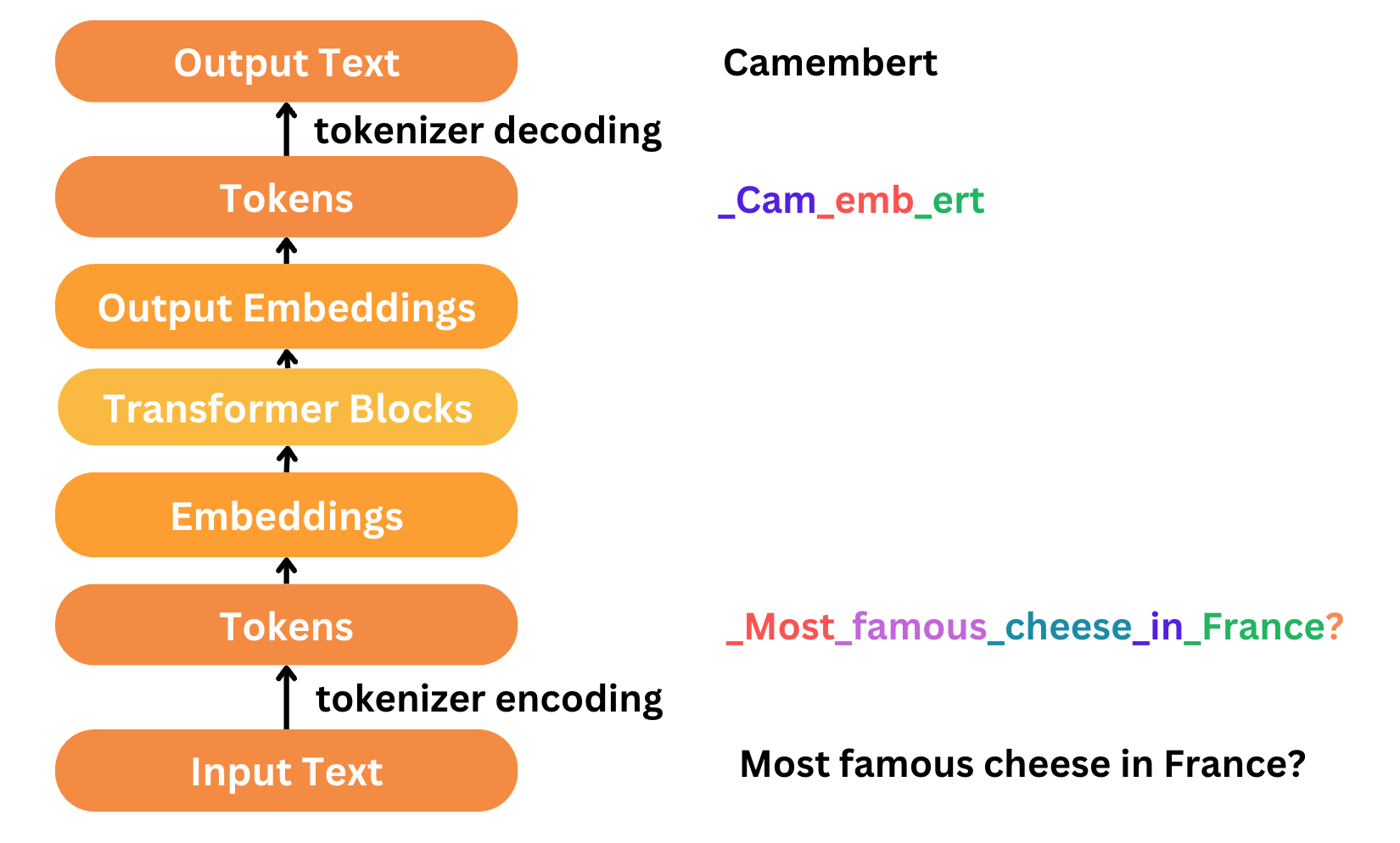

In an LLM, the tokenizer converts text into a sequence of tokens, and each token is mapped to a numerical ID that the model actually operates on. Understanding this process is essential for anyone working with AI models: practitioners who grasp the underlying processes and tools can apply these technologies more effectively in their applications. Note that "data tokenization" in security and privacy is a related but distinct idea: sensitive values are replaced with non-sensitive surrogate tokens, which supports safe data handling and compliance.
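Data tokenization in the security sense can be sketched as a vault that swaps a sensitive value for a random surrogate and keeps the mapping private. This is a minimal sketch, assuming an in-memory dictionary stands in for a secured, access-controlled token store; `TokenVault` and the `tok_` prefix are hypothetical, not any product's API.

```python
import secrets
from typing import Dict


class TokenVault:
    """Hypothetical in-memory vault mapping surrogate tokens to sensitive values."""

    def __init__(self) -> None:
        self._token_to_value: Dict[str, str] = {}
        self._value_to_token: Dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing surrogate so one value always maps to one token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        # Random surrogate: it carries no information about the original value.
        token = "tok_" + secrets.token_hex(8)
        while token in self._token_to_value:  # guard against a (very unlikely) collision
            token = "tok_" + secrets.token_hex(8)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only code with access to the vault can recover the original value.
        return self._token_to_value[token]


vault = TokenVault()
card = "4111 1111 1111 1111"  # example sensitive value
token = vault.tokenize(card)
record = {"customer": "alice", "card": token}  # safe to store or log
print(record["card"].startswith("tok_"))  # True
```

Returning the same surrogate for repeated values keeps joins and lookups working, at the cost of revealing when two records share a value; a design that issues a fresh token per occurrence avoids that leak.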

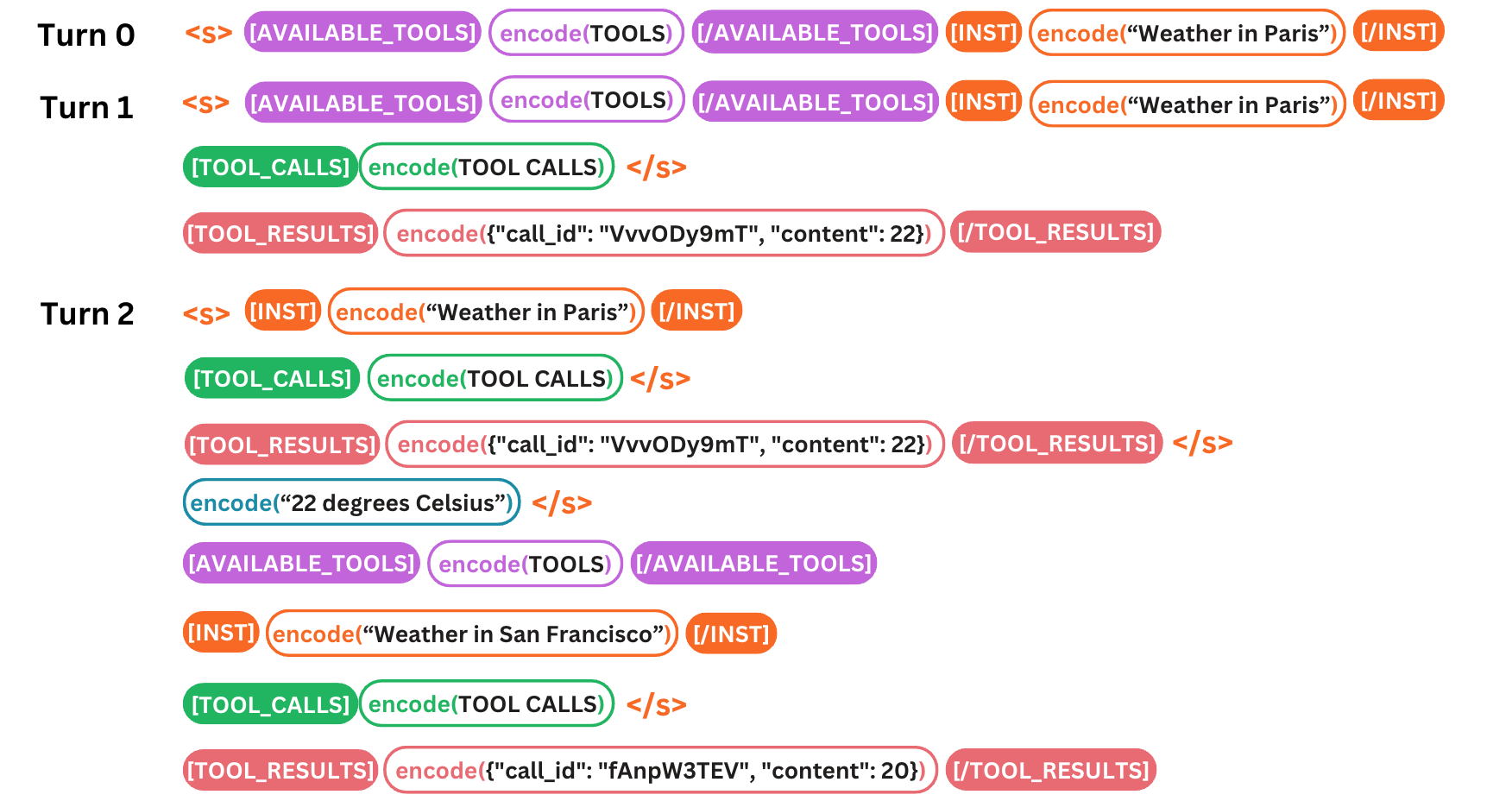
